From Prototype to Product: Launching Live-Service and Web3 Games

You’ve built a strong game prototype: players in closed playtests are having fun, your core gameplay loop holds, and the hook is immediately apparent. However, the next hurdle isn’t just polishing the game for release; it’s turning that game into a viable product that succeeds in a market already crowded with established live-service and Web3 games.

That means instrumenting analytics before you scale growth, designing an economy that doesn’t implode under real traffic from savvy players, planning a season cadence that doesn’t go flat within days of a new content release, shipping monetization without eroding player trust, and more. The reason is simple: you’re competing for players’ time and money (and both are limited), while the competition is global and already installed on your target audience’s PCs and phones.

This article is a problem-case playbook. Each section pairs a common pitfall with a real game example, then shows the signals you’d usually see in the data and a few possible fixes. Whether your game is straight GaaS or includes on-chain elements, the aim is the same: replace guesses with measurable systems, turn fun gameplay into retention, keep the economy stable at scale, and put your resources where they count.

Positioning and GTM Misread in a Saturated Genre

Problem

Launching into a saturated live-service market with the wrong price tag, or with no clear loop beyond the core gameplay, is a fast way to waste all your work. You’re competing both with evergreen titles and with sudden trend spikes (a recent hype flashpoint is Bungie’s Marathon and the broader wave of extraction shooters). If players can’t answer a simple question like “why should I play this over other games?” within the first 10 seconds of your trailer and the first 10 minutes of actual gameplay, you’ve already lost.

Example: Concord

Sony’s Concord is the cautionary tale of the live-service FPS market: little to no differentiation from other games in the genre, and a paid price point that didn’t match F2P competitors or audience expectations. Players read it as another hero shooter offering nothing they couldn’t already get for free, and the short launch window, with close to zero marketing, left no time to find a niche. Sony shut the game down within two weeks of launch.

When the product story and the market reality don’t match, no amount of post-launch content can fix it. The only exception I can name is No Man’s Sky, which is a slightly different story: Hello Games moved mountains to recover from a disastrous launch full of broken promises, and nine years later, the game is in the best state it has ever been.

What to do

Diagnose it with hard signals:

- Low conversion from store-page (PDP) impressions to wishlists to pre-purchases.

- Poor trailer click-through rate (CTR) and completion rate.

- High early quit/uninstall rate within the first hour in playtests.

- Flat demo/prologue conversion.

- Creator/UGC content that underperforms outside your own channels.

- Lifetime value (LTV) that never clears a realistic customer acquisition cost (CAC).
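To make the first and last signals concrete, here is a minimal Python sketch of a store-page funnel report and a naive LTV-versus-CAC check. The field names, the simple LTV formula (monthly ARPU × gross margin × expected lifetime in months), and the 3:1 target ratio are assumptions for illustration, not figures from any specific store or analytics platform.

```python
from dataclasses import dataclass


@dataclass
class FunnelSnapshot:
    """Store-page funnel counts for one period (field names are hypothetical)."""
    pdp_impressions: int
    wishlists: int
    pre_purchases: int


def stage_conversion(converted: int, entered: int) -> float:
    """Share of users who moved from one funnel stage to the next."""
    return converted / entered if entered else 0.0


def funnel_report(s: FunnelSnapshot) -> dict:
    """Stage-to-stage conversion for the PDP -> wishlist -> pre-purchase funnel."""
    return {
        "impression_to_wishlist": stage_conversion(s.wishlists, s.pdp_impressions),
        "wishlist_to_prepurchase": stage_conversion(s.pre_purchases, s.wishlists),
    }


def ltv_clears_cac(monthly_arpu: float, gross_margin: float,
                   lifetime_months: float, cac: float,
                   min_ratio: float = 3.0) -> bool:
    """Naive LTV = ARPU x gross margin x expected lifetime, checked against CAC.

    min_ratio=3.0 mirrors a common rule of thumb (LTV at least 3x CAC); treat it
    as an assumption to tune, not a benchmark for your genre.
    """
    ltv = monthly_arpu * gross_margin * lifetime_months
    return ltv >= min_ratio * cac


report = funnel_report(FunnelSnapshot(pdp_impressions=200_000, wishlists=6_000, pre_purchases=900))
print(report)  # impression_to_wishlist ~0.03, wishlist_to_prepurchase ~0.15
```

Tracking each stage separately matters because a weak trailer and a wrong price show up at different steps of the funnel.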

The review sentiment will echo the telemetry: “generic”, “nothing new”, “expensive for what it is”, and similar feedback from potential players.

The fix is proper positioning plus proof of concept. Validate the gameplay loop and price in playtests before you scale spending. Ship a tight demo or prologue that lands the core promise, and seed it with niche creators whose audiences match your subgenre. Make the PDP do real work: precise tags, a short gameplay trailer that shows the hook in the first seconds, and a few killer screenshots.

Show receipts internally and to partners: impressions and conversion stats, creator-attributed clicks/sales, and first-launch retention/telemetry. If the math says your price is wrong, you need to adjust it, bundle your product, or move to a hybrid/F2P model. And you have to do it before the release.

Seasonal Model Alone Won’t Generate Player Retention

Problem

Adding more content isn’t the same as keeping people playing. Significant updates create a short binge: players rush in, clear the rewards, and their interest quickly fades. When seasons aren’t properly structured, rewards are front-loaded, and the loop doesn’t change, the gameplay turns into chores: repeated tasks for marginal gains.

The main reason to log in shifts from enjoying the gameplay loop (or, in Web3 titles, acquiring on-chain assets) to avoiding FOMO, which cheapens the experience and hurts retention after each content drop. Players who miss a few days feel punished; players who keep up feel bored. The social glue is thin too: without clear role goals or reasons to queue with friends, whole squads stop playing.

The result is predictable in both behavior and metrics: an opening spike in concurrent players, a sharp slide by week two or three, and a mid-season desert in which participation clusters around the weekly reset.

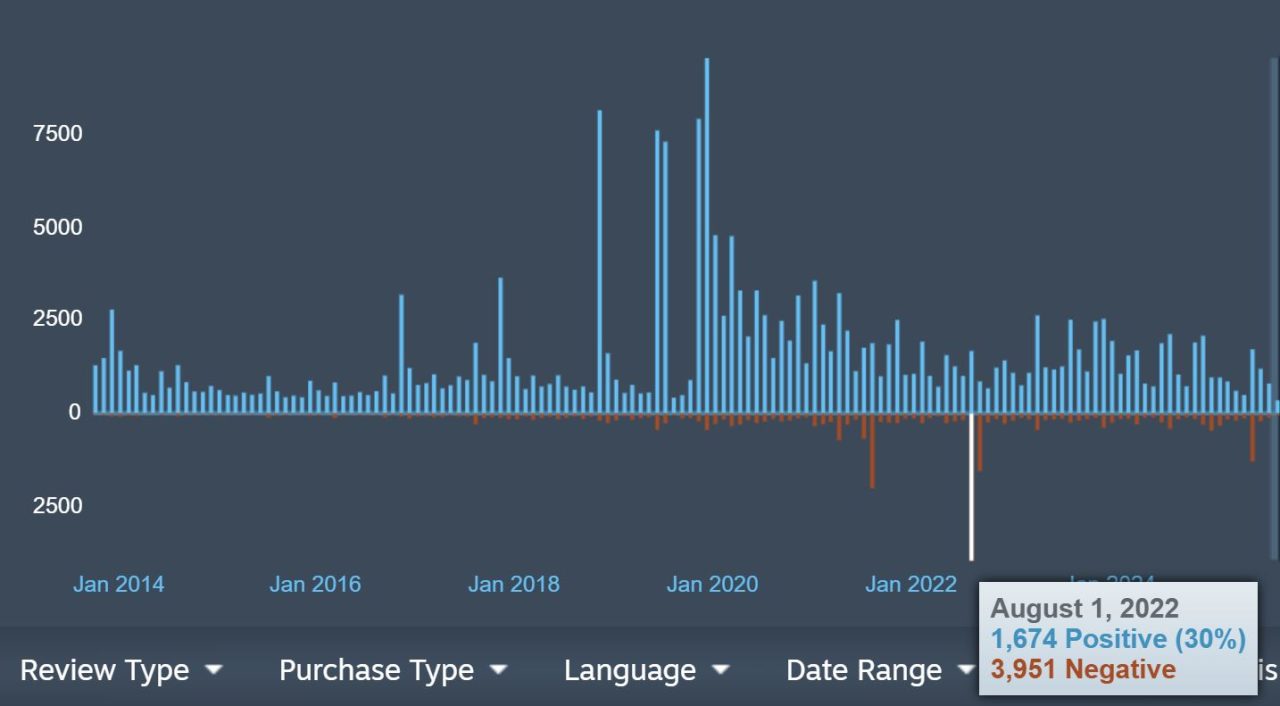

Example: Babylon’s Fall and Destiny 2

A primary case here is Babylon’s Fall. It carried the live-service label but never built a core that could hold people between content drops. Updates landed, players sampled the new activity, and the curve fell off quickly because there was no clear seasonal structure, no meaningful objectives, and no reason to play with friends that would make the next login feel necessary.

Queues slowed as concurrency slipped, and each content drop produced a smaller, shorter spike than the one before. The pattern was a launch pop, a steep week-two slide, and long stretches where events couldn’t lift the baseline. In the end, Babylon’s Fall was shut down.

Another good example is Destiny 2. New Destiny 2 expansions pull in fresh players, and the opening weeks of a season are busy: new loop, reward chase, plenty of early sessions. But engagement settles once most players claim the early seasonal rewards or, with expansions, finish the campaign and clear the new content.

Engagement concentrates around the weekly reset; chores take over, and playlists lose their grip once players get a good-enough roll. The graphs echo it: a strong week-one peak, a mid-season dip, shorter sessions, fewer fireteams, and a bump when the season finale lands.

What to do

The pattern is easy to spot. The first one or two weeks look fine, but retention drops significantly after that. Play piles up on reset day, mid-track tiers stall, and sessions get shorter. Party rate slips as squads stop coordinating. In core modes, early exits rise once most players hit their obvious short-term targets. The feedback matches the charts: “finished seasonal quest, nothing else to do”, “back to chores”, “till next reset”, and so on.
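A rough way to detect this pattern from raw telemetry is sketched below in Python. It assumes you can export the set of active player IDs per week and the date of each session; the 35% retention floor and the reset-day threshold are illustrative assumptions, not benchmarks.

```python
from collections import Counter
from datetime import date


def weekly_retention(active_by_week: list[set[str]]) -> list[float]:
    """Share of the week-0 cohort still active in each week (week 0 = 1.0)."""
    cohort = active_by_week[0]
    if not cohort:
        return [0.0] * len(active_by_week)
    return [len(cohort & week) / len(cohort) for week in active_by_week]


def reset_day_share(session_dates: list[date], reset_weekday: int) -> float:
    """Fraction of sessions played on the weekly reset day (Monday=0 ... Sunday=6)."""
    if not session_dates:
        return 0.0
    by_weekday = Counter(d.weekday() for d in session_dates)
    return by_weekday[reset_weekday] / len(session_dates)


def binge_then_fade(retention: list[float], reset_share: float, check_week: int = 3,
                    retention_floor: float = 0.35,
                    reset_share_ceiling: float = 2 / 7) -> bool:
    """Flag the 'spike, slide, reset-day clustering' pattern.

    If play were spread evenly, about 1/7 of sessions would land on reset day;
    the default ceiling of 2/7 (twice that) and the 35% floor are assumptions.
    """
    slid = len(retention) > check_week and retention[check_week] < retention_floor
    return slid and reset_share > reset_share_ceiling
```

Both conditions are required on purpose: a retention slide alone could mean the game is simply finished, while a slide plus reset-day clustering points to chore-driven play.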

Why it happens is straightforward. Battle passes and quests front-load rewards, so the fastest players finish early and set the pace. Tasks collapse into checklists instead of changing how people play. Players miss a few days, the progress track punishes them for it, and they switch to something else entirely. Social incentives are thin because there’s little reason to group up or bring friends. And the main loop stays the same.

Fix the system

Simply shipping more content won’t help. Plan seasons with built-in mid-season events that change how people play, not just what drops: week-long rotating modifiers or labs that push new tactics, a brand-new short quest, or a reprised mission with a twist.

Add role-based activities or challenges that encourage player interaction and party play. Make party and clan bonuses meaningful, and line up limited-time modes with store rotations so there’s a reason to log in beyond reset day. Add battle pass catch-up XP and streak protection so that missing a weekend doesn’t feel like a reason to abandon the game. Invest more time in social media marketing (SMM) and community interaction. Prove you hear players and actually act on what they say, instead of publishing ritual patch notes about niche stuff no one will read.
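Catch-up XP and streak protection are simple to express. The sketch below is one possible design, assuming a linear "expected pace" through a 100-tier, 70-day season; the tier count, season length, 2× cap, and two-day grace window are hypothetical tuning values.

```python
def expected_tier(day_of_season: int, season_days: int, max_tier: int) -> float:
    """Tier a player would reach on this day at a steady, linear pace."""
    day = min(max(day_of_season, 0), season_days)
    return max_tier * day / season_days


def catch_up_multiplier(current_tier: int, day_of_season: int,
                        season_days: int = 70, max_tier: int = 100,
                        max_bonus: float = 2.0) -> float:
    """Pass-XP multiplier that scales with how far a player lags the linear pace.

    Returns 1.0 for players on pace or ahead and at most `max_bonus` for players
    who haven't progressed at all. Season length and cap are assumed values.
    """
    target = expected_tier(day_of_season, season_days, max_tier)
    if target <= 0 or current_tier >= target:
        return 1.0
    lag_ratio = (target - current_tier) / target  # 0.0 (on pace) .. 1.0 (no progress)
    return 1.0 + lag_ratio * (max_bonus - 1.0)


def update_streak(streak: int, days_missed: int, grace_days: int = 2) -> int:
    """Login-streak update with protection: up to `grace_days` skipped days don't reset it."""
    return streak + 1 if days_missed <= grace_days else 1
```

Scaling the bonus with the size of the gap, rather than granting a flat boost, keeps players who are on pace from racing even further ahead.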

Prove it in the data. Track weekly event participation, pass completion before and after catch-up, party-rate uplift during the mid-season beat, and DAU and quit-rate shifts in core playlists. Set a simple gate: if the mid-season change doesn’t lift participation and session length within a few days, roll it back and try a different loop change instead of stacking more meaningless content.
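The rollback gate can be written as a small function, so the decision rule is agreed on before the data comes in. This Python sketch compares average daily metrics before and after the change. The three-day minimum and the 5% lift thresholds are assumptions. A plain before/after comparison can be skewed by weekends or external events, so a holdout group, if you have one, is a more robust baseline.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class DailyMetrics:
    event_participation: float  # share of DAU who joined the mid-season activity
    avg_session_minutes: float


def relative_lift(before: float, after: float) -> float:
    """(after - before) / before, or 0.0 when there is no baseline."""
    return (after - before) / before if before else 0.0


def mid_season_gate(baseline: list[DailyMetrics], treatment: list[DailyMetrics],
                    min_days: int = 3, min_participation_lift: float = 0.05,
                    min_session_lift: float = 0.05) -> str:
    """Return 'wait', 'keep', or 'rollback' for a mid-season loop change.

    Both relative lifts must clear their (assumed) thresholds for the change to stay.
    """
    if len(treatment) < min_days or not baseline:
        return "wait"
    p_lift = relative_lift(mean([m.event_participation for m in baseline]),
                           mean([m.event_participation for m in treatment]))
    s_lift = relative_lift(mean([m.avg_session_minutes for m in baseline]),
                           mean([m.avg_session_minutes for m in treatment]))
    if p_lift >= min_participation_lift and s_lift >= min_session_lift:
        return "keep"
    return "rollback"
```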

Stealth In-Game Economy Changes Drive Players Away

Problem

When drop rates or reward values shift abruptly, players feel the rug pulled out from under them. The most significant gains are usually extracted by a small set of RMT farmers who can exploit the volatility, while everyone else watches their progress devalue overnight. That mismatch results in regular players quitting, leaving negative reviews, and lighting up forums and Discord with backlash.

In Web3 games, this hits harder: on-chain prices swing in minutes, bots jump on the gaps, and everyone sees it in real time, while players, developers, and the publisher take immediate, real-money losses.

Example: Path of Exile

The Lake of Kalandra league (patch 3.19) in Path of Exile is a great example: loot and reward changes landed without enough framing, the community reacted immediately, and the team was forced into hotfixes while sentiment slid. Once players believe the rules of the game are inconsistent, they stop playing and trading and start leaving.

Grinding Gear Games learned from the mistake: later PoE leagues didn’t repeat it, and Path of Exile 2 already feels healthier even in early access, judging by player numbers and reviews of its latest major patch.

What to do

You’ll see the problem in the data almost immediately. Reviews turn negative compared to the prior season. Basic materials and crafting inputs surge in price while end-game items stagnate, a sign of stalled progression. Liquidity dries up as trade volume thins out, even as listings pile up. D7/D30 retention dips for new players, and session length shortens as veterans, burnt out by the changes, spend less time in the game.

One simple tweak won’t fix it. You have to win players back, not just undo the problematic patch. Announce economic changes early, with explicit targets. Ship patches with guardrails: retention floors that automatically revert key changes if new-player D7 drops beyond a threshold, plus caps or sinks to keep inflation in check. Describe economy updates in patch notes in plain language, and follow up with a dated postmortem that shows what stayed, what was reverted, and why.
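Here is a minimal sketch of the two guardrails named above: a retention floor that auto-reverts a change, and a daily faucet cap. The class and function names are hypothetical. The thresholds (a 10% relative D7 drop and a 500-player minimum cohort) are assumptions to tune against your own noise levels.

```python
from dataclasses import dataclass


@dataclass
class EconomyChange:
    """A toggleable economy tweak, e.g. a new drop-rate table (hypothetical)."""
    name: str
    enabled: bool = True


@dataclass
class RetentionGuardrail:
    """Auto-revert an economy change when new-player D7 falls too far below baseline.

    The 10% relative-drop threshold and the 500-player minimum cohort are
    illustrative assumptions; tune them to your own noise levels.
    """
    baseline_d7: float
    max_relative_drop: float = 0.10
    min_cohort_size: int = 500

    def evaluate(self, change: EconomyChange, cohort_size: int, observed_d7: float) -> bool:
        """Disable the change and return True if the retention floor is breached."""
        if not change.enabled or cohort_size < self.min_cohort_size:
            return False  # already reverted, or cohort too small to trust
        drop = (self.baseline_d7 - observed_d7) / self.baseline_d7
        if drop > self.max_relative_drop:
            change.enabled = False  # a real system might also notify the live-ops owner here
            return True
        return False


def capped_reward(amount: int, earned_today: int, daily_cap: int) -> int:
    """Faucet cap: grant at most what's left of the player's daily currency allowance."""
    return max(0, min(amount, daily_cap - earned_today))
```

The minimum cohort size exists so that a handful of early churners on launch day can't trigger a revert on noise alone.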

Show your work. Include before/after metrics such as median wealth per active player, trade volumes, and clearance time for key items or resources. If you can, add simple time-to-target charts for specific in-game goals. The goal isn’t perfect balance on day one; it’s to demonstrate stability and a feedback loop that players can trust.

Ideally, you should catch these problems before the patch lands. Before you ship, stress-test the economy in staging: replay real player logs against the new drop tables, simulate trading and crafting, and run a small NDA or public test. Watch D1/D7 proxies, the sink/source ratio, median wealth, and how fast key items clear. Treat it like a release: a checklist, a rollback plan, and a live-ops kill switch. It’s better to spend a little more on QA or delay the content drop than to deal with the blowback from a broken economy.
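Replaying logs against a new drop table can start as small as the Python sketch below. It assumes your logs can be reduced to kill counts per player and that each drop is an independent roll per kill. Real loot systems are usually more complex. The drop tables and spending numbers in the usage lines are made up for illustration.

```python
import random
from statistics import median

# Hypothetical drop table: drop name -> (chance per kill, currency value)
DropTable = dict[str, tuple[float, int]]


def replay_kills(kills_per_player: dict[str, int], table: DropTable, seed: int = 0) -> dict[str, int]:
    """Replay logged kill counts against a drop table; return currency earned per player.

    A fixed seed keeps runs reproducible so old and new tables can be compared.
    """
    rng = random.Random(seed)
    earned = {}
    for player, kills in kills_per_player.items():
        total = 0
        for _ in range(kills):
            for chance, value in table.values():
                if rng.random() < chance:
                    total += value
        earned[player] = total
    return earned


def economy_summary(earned: dict[str, int], spent: dict[str, int]) -> dict:
    """Sink/source ratio and median net currency change over the replayed window."""
    sources = sum(earned.values())
    sinks = sum(spent.get(p, 0) for p in earned)
    net = [earned[p] - spent.get(p, 0) for p in earned]
    return {
        "sink_source_ratio": sinks / sources if sources else 0.0,
        "median_net_wealth": median(net) if net else 0,
    }


# Illustrative usage: compare an old and a new table on the same logs.
logs = {"p1": 120, "p2": 40, "p3": 300}          # kills per player (made-up numbers)
old = {"gold": (0.50, 10), "rare_orb": (0.01, 500)}
new = {"gold": (0.50, 12), "rare_orb": (0.02, 500)}
spent = {"p1": 800, "p2": 100, "p3": 2500}       # sink spending over the same window
for label, table in (("old", old), ("new", new)):
    print(label, economy_summary(replay_kills(logs, table), spent))
```

A sink/source ratio that falls well below its current level under the new table is an early warning of inflation.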

Gas Wars: Day-One Mint Failures

Problem

A poor mint plan and thin capacity modeling can wreck the first day of a brand-new Web3 game launch. When everyone mints at once, fees spike, transactions fail, refunds pile up, and the first experience is a queue of errors. The game can’t handle demand, and sentiment turns before the game even starts.

Example: Otherside

The Otherside land mint is the textbook example: an all-at-once rush drove fees to extremes, many transactions failed, and the conversation flipped from excitement to complaints in minutes. The aftermath was a visible loss of confidence that bled into how players and partners viewed the project.

What to do

Model the peaks and design the flow. Simulate at scale (wallets, bots, retries), then mint in stages with allowlists, per-wallet caps, and short windows that spread load. Use commit–reveal or Dutch-style phases to dampen sniping, sponsor/batch transactions where possible, and publish clear refund rules.
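The staging rules can be modeled off-chain before they're encoded in a contract, which also gives your load simulation something to run against. This Python sketch covers allowlist phases, per-wallet caps, and a descending (Dutch-style) price. The phase names, prices, and step sizes are hypothetical. Commit–reveal is not shown, and the real enforcement would have to live in the mint contract itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MintPhase:
    name: str
    start: int                            # unix seconds, inclusive
    end: int                              # unix seconds, exclusive
    per_wallet_cap: int
    allowlist: Optional[set[str]] = None  # None means a public phase


def dutch_price(t: int, start: int, start_price: int, floor_price: int,
                step: int, step_seconds: int) -> int:
    """Descending price: drop `step` every `step_seconds`, never below the floor."""
    if t <= start:
        return start_price
    steps = (t - start) // step_seconds
    return max(floor_price, start_price - steps * step)


class StagedMint:
    """Off-chain model of a phased mint with allowlists and per-wallet caps."""

    def __init__(self, phases: list[MintPhase]):
        self.phases = phases
        self.minted: dict[tuple[str, str], int] = {}  # (phase name, wallet) -> quantity

    def active_phase(self, t: int) -> Optional[MintPhase]:
        for phase in self.phases:
            if phase.start <= t < phase.end:
                return phase
        return None

    def try_mint(self, wallet: str, qty: int, t: int) -> tuple[bool, str]:
        phase = self.active_phase(t)
        if phase is None:
            return False, "no active phase"
        if phase.allowlist is not None and wallet not in phase.allowlist:
            return False, "not on allowlist"
        key = (phase.name, wallet)
        if self.minted.get(key, 0) + qty > phase.per_wallet_cap:
            return False, "per-wallet cap exceeded"
        self.minted[key] = self.minted.get(key, 0) + qty
        return True, phase.name
```

Counting mints per phase and wallet means an allowlisted wallet can still take part in the public phase under that phase's own cap.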

Watch a live dashboard: mint attempts per minute, success/fail rate, median/95th-percentile completion time, and fee bands by cohort. If failure or fee thresholds are breached, pause, widen the window, and resume. This should be backed by a plain-language post explaining what changed and why.
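The dashboard numbers and the pause rule could be computed per time window roughly as follows. This sketch uses a nearest-rank percentile and assumes each attempt record carries a success flag, a completion time, and a fee. The 20% failure and 200 gwei fee thresholds are placeholders, not recommendations.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class MintAttempt:
    succeeded: bool
    completion_seconds: float  # submit -> confirmed (or -> failed)
    fee_gwei: float


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile; simple and good enough for a live dashboard."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def window_stats(attempts: list[MintAttempt]) -> dict:
    """Failure rate, completion-time p50/p95 (successes only), and p95 fee for one window."""
    ok_times = [a.completion_seconds for a in attempts if a.succeeded]
    fees = [a.fee_gwei for a in attempts]
    p95_fee: Optional[float] = percentile(fees, 95) if fees else None
    return {
        "attempts": len(attempts),
        "fail_rate": 1 - len(ok_times) / len(attempts) if attempts else 0.0,
        "p50_completion_s": percentile(ok_times, 50) if ok_times else None,
        "p95_completion_s": percentile(ok_times, 95) if ok_times else None,
        "p95_fee_gwei": p95_fee,
    }


def should_pause(stats: dict, max_fail_rate: float = 0.2,
                 max_p95_fee_gwei: float = 200.0) -> bool:
    """Pause when either (assumed) threshold is breached in the current window."""
    fee = stats["p95_fee_gwei"]
    return stats["fail_rate"] > max_fail_rate or (fee is not None and fee > max_p95_fee_gwei)
```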

Bridge or L1 Dependency Risk

Problem

Relying on a single L1 or a bridge creates an expensive point of failure for a Web3 game. When that rail breaks (hack, outage, or halted withdrawals), the impact jumps straight into the game’s economy: tokens and NFTs go illiquid, prices gap, marketplaces stall, and planned beats slip because you can’t safely mint, trade, or settle rewards. Players can’t cash in or move assets, creators miss windows, partners freeze spend, and your roadmap suddenly revolves around incident response instead of new content.

Example: Axie Infinity and DeFi Kingdoms

Two well-known examples show how fast this can propagate. The Ronin bridge hack hit Axie Infinity’s economy far beyond the theft itself: confidence and liquidity evaporated, and recovery took months. DeFi Kingdoms faced a similar shock after the Horizon Bridge exploit on Harmony. In both cases, the bridge or L1 failure forced redesigns of timelines, liquidity plans, and community promises.

What to do

Measure exposure and rehearse exits. Keep a treasury buffer in stablecoins to cover months of ops and make-goods; cap bridge exposure at the contract level; and alert on bridge queues, gas spikes, and confirmation delays. Track KPIs during calm and crisis: retained wallets 7/30/60 days post-incident, liquidity restored vs pre-incident (DEX depth, spread, slippage), on-chain GMV, marketplace listings revived, and time-to-resume core flows (mint/trade/claim).
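Some of these checks reduce to simple arithmetic that's worth automating. The Python sketch below computes treasury runway, checks a single-bridge exposure limit, flags rail signals running well above their calm-period baseline, and reports a recovery ratio for KPIs such as DEX depth or on-chain GMV. All thresholds (a 20% exposure share and a 3× alert factor) are assumptions. An off-chain check like this complements limits enforced at the contract level; it does not replace them.

```python
from dataclasses import dataclass, fields


def runway_months(stablecoin_balance: float, monthly_opex: float,
                  reserved_make_goods: float = 0.0) -> float:
    """Months of operations the stablecoin buffer covers after setting aside make-goods."""
    usable = max(0.0, stablecoin_balance - reserved_make_goods)
    return usable / monthly_opex if monthly_opex > 0 else float("inf")


def bridge_exposure_ok(value_behind_bridge: float, treasury_value: float,
                       max_share: float = 0.2) -> bool:
    """Policy check: value depending on a single bridge stays under `max_share` of treasury."""
    return treasury_value > 0 and value_behind_bridge / treasury_value <= max_share


@dataclass
class RailHealth:
    bridge_queue_depth: float
    gas_price_gwei: float
    confirmation_delay_s: float


def rail_alerts(current: RailHealth, calm_baseline: RailHealth, factor: float = 3.0) -> list[str]:
    """Name every signal running more than `factor` times its calm-period baseline."""
    alerts = []
    for f in fields(RailHealth):
        now, calm = getattr(current, f.name), getattr(calm_baseline, f.name)
        if calm > 0 and now > factor * calm:
            alerts.append(f.name)
    return alerts


def recovery_ratio(current: float, pre_incident: float) -> float:
    """A KPI now vs its pre-incident level (1.0 = fully restored)."""
    return current / pre_incident if pre_incident else 0.0
```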

Design for chain neutrality where possible: abstract chain calls in the client, keep a portable state (snapshot heights, token/NFT mappings), and maintain a migration playbook. Add circuit breakers (pausable contracts, per-block mint caps), multisig/admin hygiene with rotation, and a comms timeline (T+15 min acknowledgement, T+60 min plan, daily updates) so you can pause early, migrate cleanly, and prove recovery with real stats.
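Chain neutrality and circuit breakers can be sketched as an interface with a guard in front of it. In this Python sketch, `ChainClient`, `InMemoryChain`, `CircuitBreaker`, and `migrate` are hypothetical names. A real deployment would enforce pausing and per-block caps inside the contracts (OpenZeppelin's `Pausable` is one common building block) and use a client-side layer like this as a second line of defense.

```python
from abc import ABC, abstractmethod


class ChainClient(ABC):
    """Hypothetical chain-agnostic interface; game code only talks to this."""

    @abstractmethod
    def current_block(self) -> int: ...

    @abstractmethod
    def mint(self, wallet: str, token_id: int) -> str: ...

    @abstractmethod
    def snapshot(self) -> tuple[int, dict[int, str]]: ...  # (block height, token_id -> owner)


class InMemoryChain(ChainClient):
    """Stand-in backend for tests and migration rehearsals."""

    def __init__(self) -> None:
        self.block = 0
        self.owners: dict[int, str] = {}

    def current_block(self) -> int:
        return self.block

    def mint(self, wallet: str, token_id: int) -> str:
        self.owners[token_id] = wallet
        return f"tx-{self.block}-{token_id}"

    def snapshot(self) -> tuple[int, dict[int, str]]:
        return self.block, dict(self.owners)


class CircuitBreaker:
    """Pause switch plus per-block mint cap, checked before any chain call."""

    def __init__(self, chain: ChainClient, per_block_cap: int) -> None:
        self.chain = chain
        self.per_block_cap = per_block_cap
        self.paused = False
        self._block = -1
        self._minted_this_block = 0

    def pause(self) -> None:
        self.paused = True

    def resume(self) -> None:
        self.paused = False

    def mint(self, wallet: str, token_id: int) -> str:
        if self.paused:
            raise RuntimeError("minting paused")
        block = self.chain.current_block()
        if block != self._block:  # new block: reset the per-block counter
            self._block, self._minted_this_block = block, 0
        if self._minted_this_block >= self.per_block_cap:
            raise RuntimeError("per-block mint cap reached")
        tx = self.chain.mint(wallet, token_id)
        self._minted_this_block += 1
        return tx


def migrate(source: ChainClient, target: ChainClient) -> int:
    """Replay a snapshot's ownership map onto another backend; return the snapshot height."""
    height, owners = source.snapshot()
    for token_id, owner in sorted(owners.items()):
        target.mint(owner, token_id)
    return height
```

Because the game depends only on `ChainClient`, rehearsing a migration is a matter of pointing `migrate` at a second backend and comparing ownership maps.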

TL;DR: Proof Before Launch

The typical issues are clear: weak positioning and off-target GTM in a crowded lane; seasonal models that spike and then stall without real retention mechanics; surprise economy shifts that break trust; gas wars at mint because capacity wasn’t modeled; and single-chain or bridge dependence that turns outages into roadmap slips. And there are about a dozen more primary problems, plus even more secondary ones.

The fixes live in boring fundamentals: instrumentation before spend, guardrails on experiments, economy sinks and caps, mid-season loop changes, canary rollouts, and an incident plan you can actually run.

On paper, this sounds simple; in practice, it is hard. Turning a good build into a durable product takes disciplined analytics, transparent owner-by-owner processes, and the humility to change course when the numbers say so.

You still need proof you can point to:

- New players should hit a fun moment fast on their first launch.

- They should want to come back tomorrow and the week after.

- Your store page and trailer should make sense to someone who’s never heard of your game.

- The in-game economy should hold its shape when real people start trading, min-maxing, and trying to break it (highest priority for on-chain games).

Publishers want that evidence, and many games are competing for the same limited slots. If you already have a solid prototype and early metrics, consider an accelerator as a pragmatic middle layer: accelerators can help wire up analytics, stress-test live-ops and economy changes, provide infrastructure credits and small-scale funding, and get you into the right rooms with investors and publishers.

Game accelerators replace neither the fundamentals nor real publishing, but they can turn a promising build into shareable proof that convinces investors.