Anisotropic Filtering: In-Depth Guide to the Tech

Anisotropic filtering (AF) is a texture filtering technique that improves texture clarity on surfaces viewed at oblique angles, reducing blurriness and preserving detail in 3D computer graphics. In this article, we’ll dive into how it works, why it performs so well compared to other methods, and how you can tweak its settings for the best results. We’ll also take a peek at other texture enhancement methods and compare them to AF, so you’ll have a solid grasp of how to keep your games looking good without sacrificing too much performance. And if you have a specific question or run into an issue, check out the FAQ section for quick answers!

Table of contents

- Introduction

- Basics of Texture Filtering

- What Is Anisotropic Filtering

- How Anisotropic Filtering Works

- Benefits of Anisotropic Filtering in Games

- Anisotropic Filtering vs Other Texture Filtering Methods

- Performance Impact of Anisotropic Filtering

- How to Configure Anisotropic Filtering in Modern Games

- Alternative Methods for Improving Texture Quality

- FAQ

- TL;DR

Introduction

In computer graphics, one of the most crucial factors for creating a believable scene is texture quality. If you’ve ever noticed distant roads looking blurry or surfaces losing detail at steep angles, you’ve encountered the limits of basic filtering methods. Early techniques (bilinear and trilinear filtering) helped address some issues, but they often aren’t enough to maintain crisp visuals on angled surfaces.

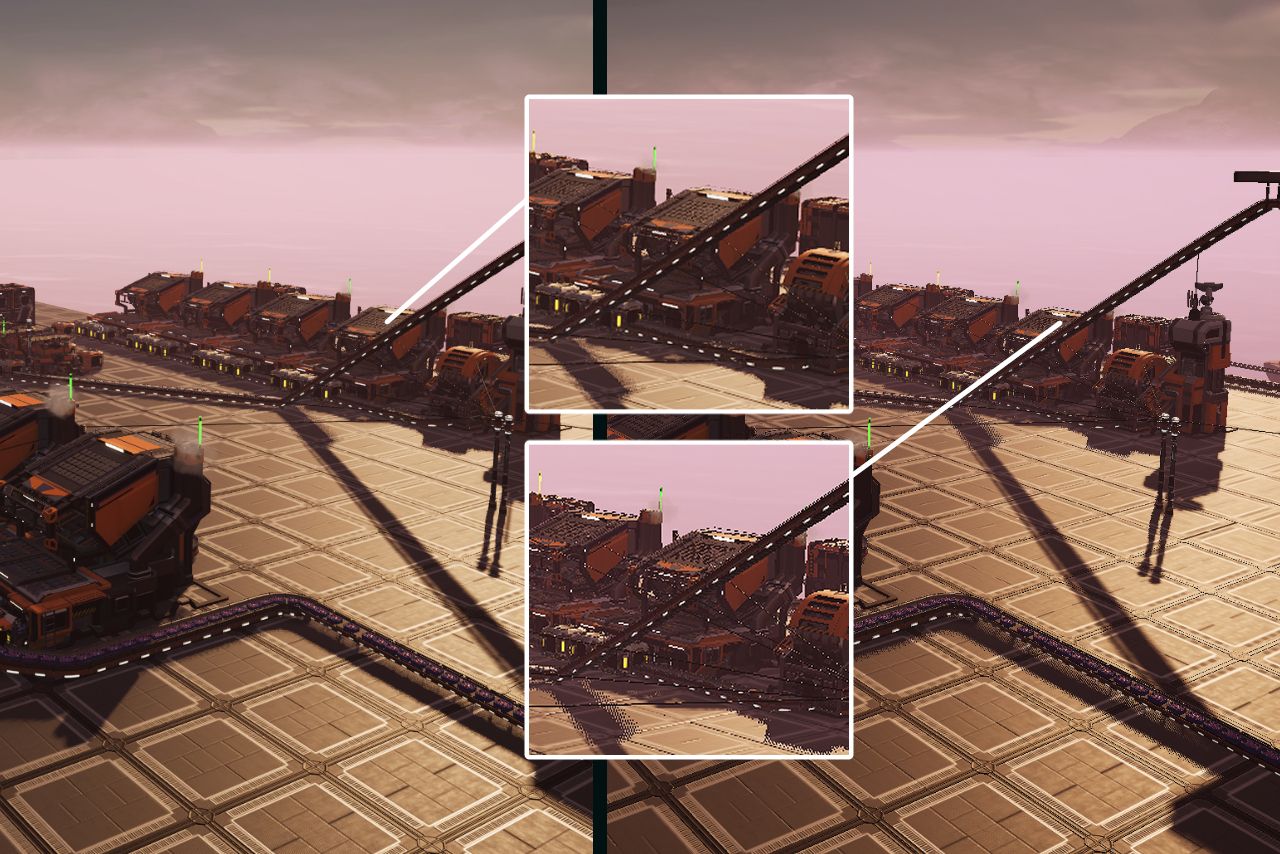

That’s why anisotropic filtering has become a staple in today’s game engines and 3D applications. To see what anisotropic filtering means in gaming, think of how roads or floors in 3D games often look blurry in the distance. AF’s main job is to keep textures detailed and sharp, even at extreme viewing angles. Without it, the long roads in your favorite open-world RPGs would look muddy and washed out, and slanted walls would lose a ton of detail as you move the camera around.

Basics of Texture Filtering

In 3D graphics, textures are 2D images that are projected onto an object’s surface to give it color and detail. However, when these textures are displayed on your screen, issues like scaling and perspective distortion can arise, especially if you move far away from the object or change your viewing angle drastically.

The simplest ways to handle these distortions are bilinear and trilinear filtering. Bilinear filtering takes the four nearest texels (texture pixels) and blends them, weighting each by how close it is to the sample point, to determine the color of a single pixel on the screen. Simply put, it smooths out textures by blending colors from neighboring texels, reducing blocky edges. Trilinear filtering goes a step further: it performs a bilinear lookup on each of the two nearest MIP levels (explained in the next paragraph) and interpolates between the results, which reduces the visible “popping” when textures switch resolution with distance. In other words, it smooths transitions between levels of texture detail, making changes in quality less noticeable.
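To make the bilinear step concrete, here is a minimal Python sketch of a single bilinear lookup. It assumes a grayscale texture stored as a list of rows, texel centers at integer coordinates, and clamp-to-edge addressing. Real GPUs work in normalized coordinates with texel centers offset by half a texel, so treat `bilinear_sample` as a hypothetical, illustrative helper rather than any API’s exact behavior.

```python
import math

def bilinear_sample(texture, u, v):
    """Bilinearly sample a grayscale texture (list of rows) at texel coords (u, v).

    Assumes texel centers at integer coordinates and clamp-to-edge addressing.
    """
    height, width = len(texture), len(texture[0])
    # Clamp into the valid range so all four neighbours exist.
    u = min(max(u, 0.0), width - 1.0)
    v = min(max(v, 0.0), height - 1.0)
    x0, y0 = int(math.floor(u)), int(math.floor(v))
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = u - x0, v - y0  # fractional position between texel centers
    # Blend horizontally along the two rows, then blend those results vertically.
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

checker = [[0.0, 1.0], [1.0, 0.0]]  # 2x2 black/white checkerboard
print(bilinear_sample(checker, 0.5, 0.5))  # 0.5: an even blend of all four texels
```

Trilinear filtering would run a lookup like this on two adjacent MIP levels and blend the two results by how far the pixel sits between them.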

Mipmapping is another related concept. The game or engine pre-calculates a chain of reduced-resolution versions (MIP levels) of each texture, each typically half the width and height of the previous one, and selects the appropriate level based on how many texels fall under a single screen pixel, which mostly depends on how far away the surface is. This cuts down flickering and shimmering in motion but doesn’t solve the blur you’ll see on surfaces at sharp angles.
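Building a MIP chain like this can be sketched in a few lines. The sketch assumes a square, power-of-two grayscale texture and a simple 2x2 box filter; real tools often use higher-quality downsampling filters, and `build_mip_chain` is a hypothetical name used only for illustration.

```python
def build_mip_chain(texture):
    """Return [level0, level1, ...] down to 1x1, halving width and height per level.

    Assumes a square, power-of-two grayscale texture (list of rows). Each texel of
    the next level is the average of a 2x2 block of the previous one (box filter).
    """
    levels = [texture]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        size = len(prev) // 2
        nxt = [
            [
                (prev[2 * y][2 * x] + prev[2 * y][2 * x + 1]
                 + prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4.0
                for x in range(size)
            ]
            for y in range(size)
        ]
        levels.append(nxt)
    return levels

tex = [[(x + y) % 2 for x in range(4)] for y in range(4)]  # 4x4 checkerboard
chain = build_mip_chain(tex)
print([len(level) for level in chain])  # [4, 2, 1]
print(chain[-1])  # [[0.5]]
```

Because each level has a quarter of the texels of the one before it, the whole chain adds only about one third to the texture’s memory footprint.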

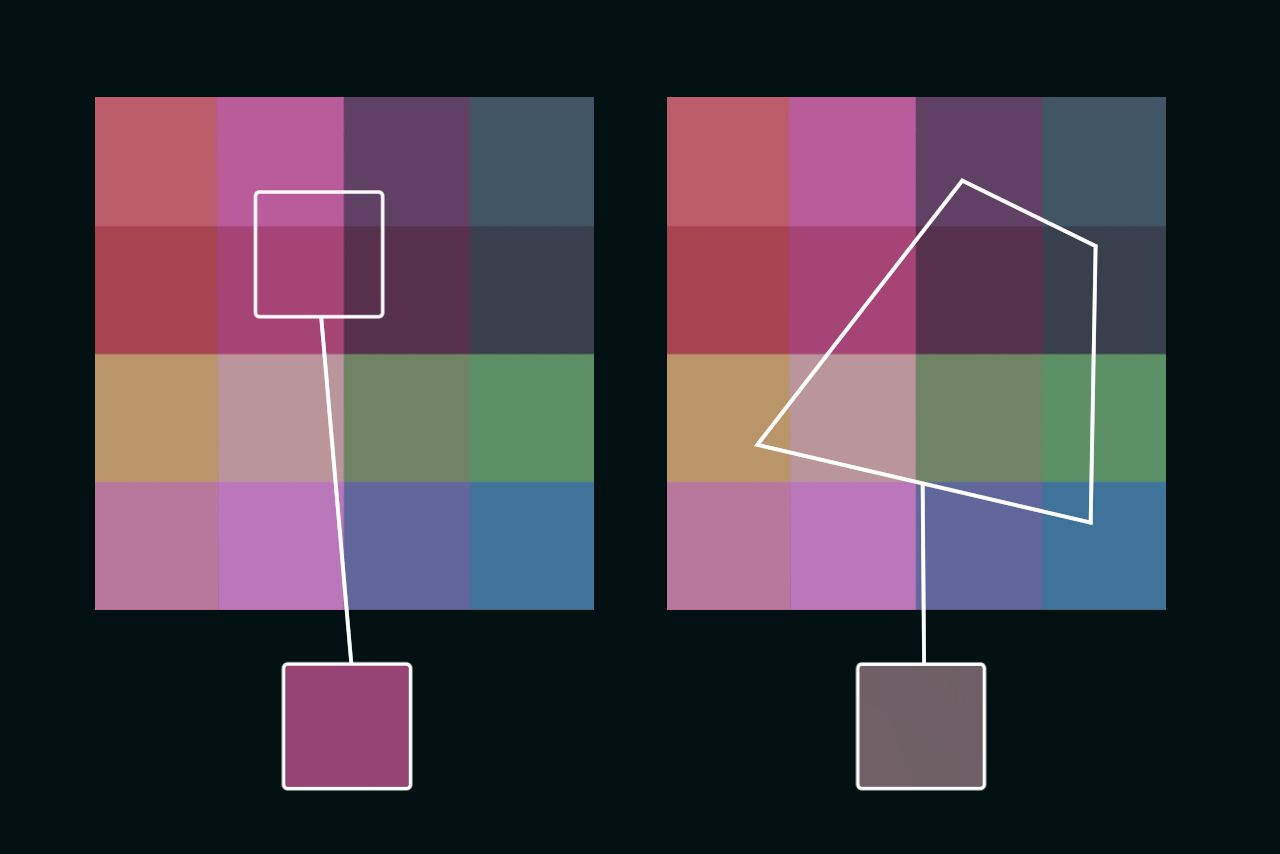

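All these methods are isotropic: they apply the same amount of blur in every direction. When a surface is tilted away from the camera, the area a single screen pixel covers on the texture (its footprint) is stretched much more along one axis than along the other. An isotropic filter picks its MIP level based on the longer axis, so the texture ends up over-blurred along the shorter one, which produces the familiar smeared look of textures viewed at an angle.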
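A small worked example shows where the blur comes from. The sketch below picks a MIP level the way a conventional isotropic filter does: it measures the pixel’s footprint from texture-coordinate derivatives (in texels per screen pixel) and takes the log2 of the longer side. The function name and the floor-pixel numbers are hypothetical; on real hardware the GPU computes the derivatives for you.

```python
import math

def isotropic_lod(dudx, dvdx, dudy, dvdy):
    """MIP level chosen by an isotropic filter for one screen pixel.

    The arguments say how far the texture coordinates (u, v), in texels, move
    when stepping one pixel along screen x and one pixel along screen y.
    """
    len_x = math.hypot(dudx, dvdx)  # footprint length along screen x
    len_y = math.hypot(dudy, dvdy)  # footprint length along screen y
    rho = max(len_x, len_y)         # the longer side decides the level
    if rho <= 1.0:
        return 0.0                  # magnified or 1:1, so use full resolution
    return math.log2(rho)

# A floor pixel spanning 1 texel across but 8 texels in depth:
print(isotropic_lod(1.0, 0.0, 0.0, 8.0))  # 3.0
```

Level 3 has one eighth of the full resolution along each axis, so the detail across the floor, which only needed level 0, is averaged away by a factor of eight.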

What Is Anisotropic Filtering

Anisotropic filtering was created specifically to handle unequal texture stretching along different axes. “Anisotropy” literally means that a property differs depending on direction. In practical terms, an inclined surface can be squashed heavily along one axis but only slightly along another, and the filter needs to adapt to that distortion.

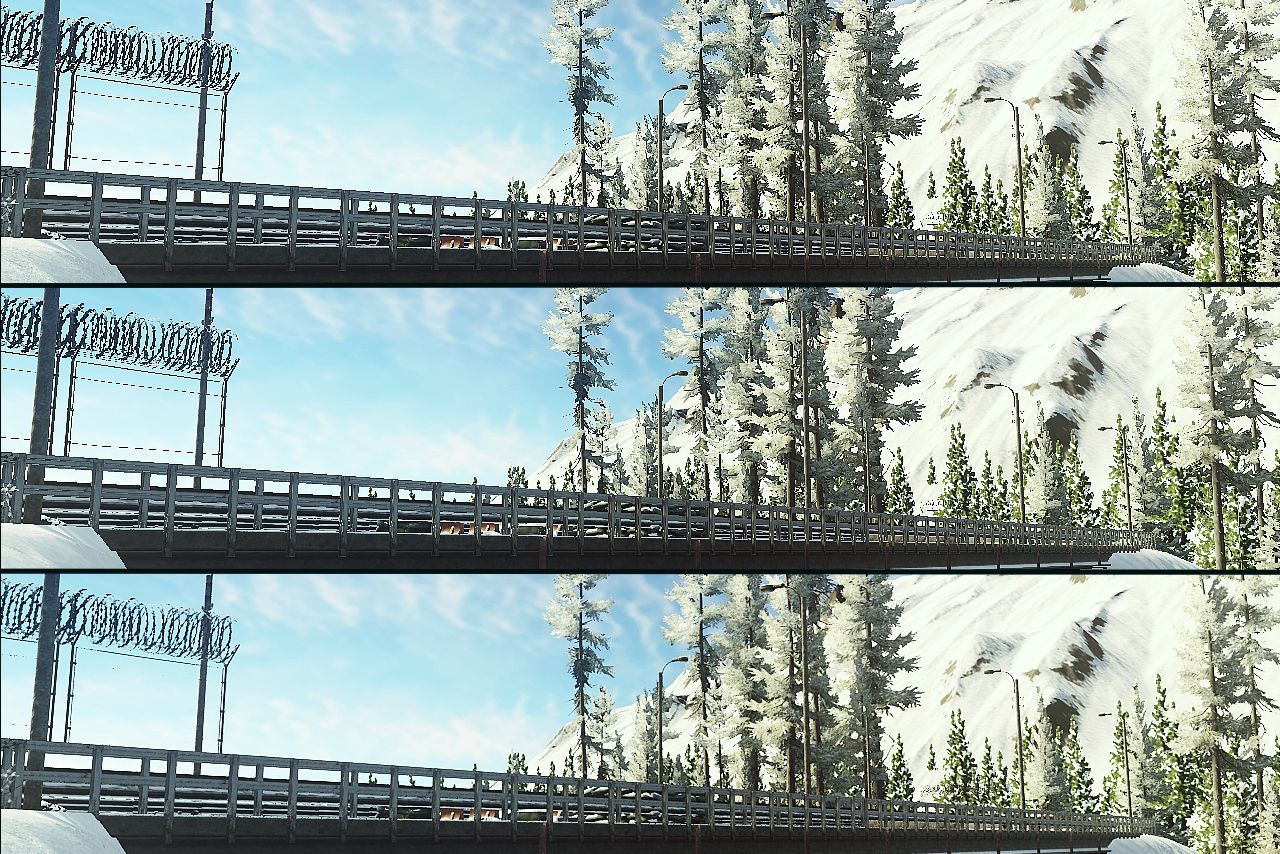

AF’s main goal is to keep textures looking sharp and detailed even when you’re looking at them from a steep perspective. Think about a paved road stretching out in front of you in an open-world RPG. Without AF, it’s just a blurry mess. With AF, you’ll spot road textures, pebbles, markings, and other subtle details that make the environment more immersive.

Modern game engines like Unity, Godot, and Unreal Engine expose AF as a setting, and graphics APIs (DirectX, OpenGL, Vulkan) pass it through to the GPU, whose texture units perform the filtering in hardware. That is why AF provides a noticeable boost in clarity with minimal performance impact.

How Anisotropic Filtering Works

To see why AF preserves crispness, let’s break down the process. When a graphics card renders a textured polygon, the GPU needs to map texture pixels (texels) to actual screen pixels. At extreme angles, the texture may get stretched or compressed differently along each axis.

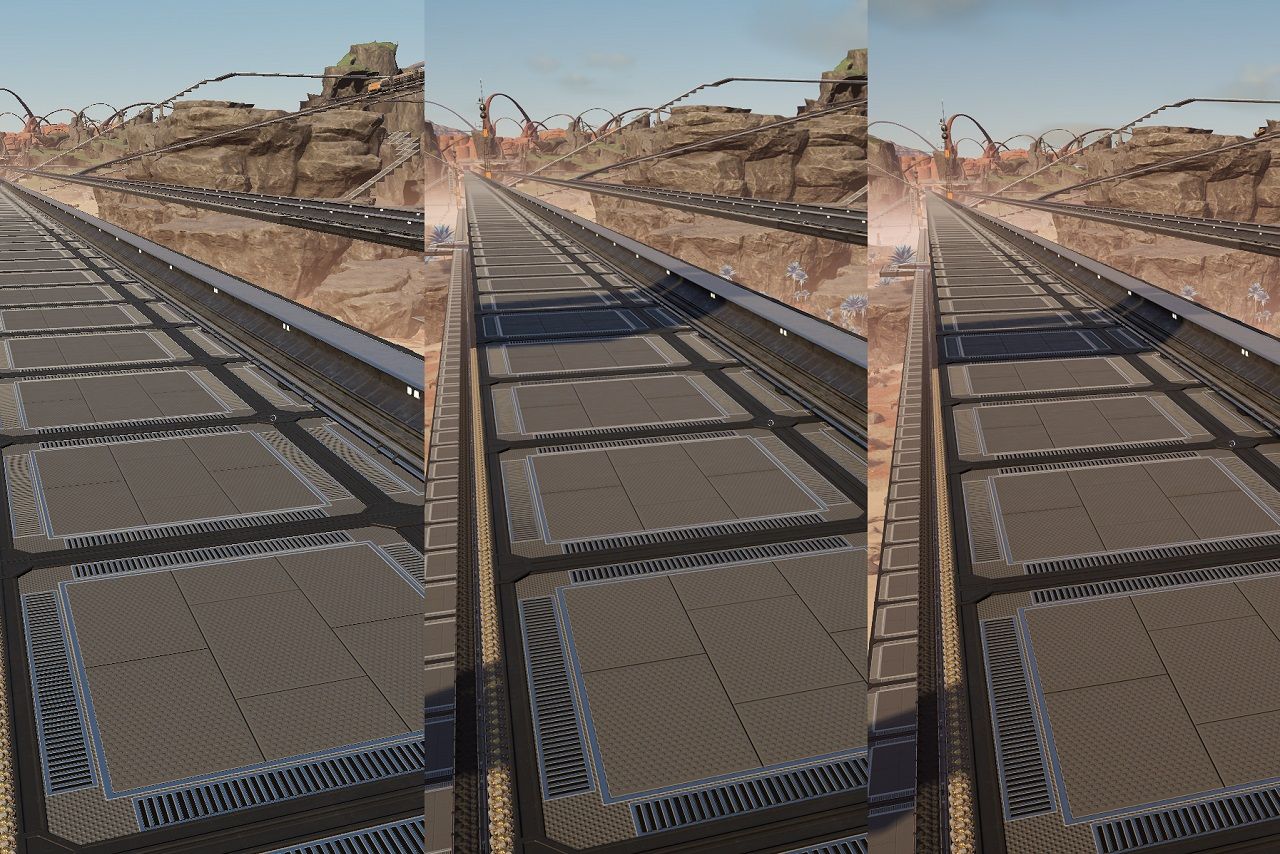

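For each pixel, the GPU estimates how distorted the texture is by computing the anisotropy ratio: how much longer the pixel’s footprint is along its long axis than along its short axis. The AF setting (2x, 4x, 8x, 16x) caps how large a ratio the hardware will handle (2:1, 4:1, 8:1, 16:1). The higher the ratio, the more elongated the footprint, and the more samples the GPU needs to take to render a single screen pixel properly. At a 16x setting, the GPU can take up to 16 samples per pixel along the long axis, each of which is itself a bilinear or trilinear lookup, whereas plain trilinear filtering takes a single trilinear lookup (one bilinear lookup on each of two MIP levels).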
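The ratio calculation can be sketched using the approximation described in the OpenGL `EXT_texture_filter_anisotropic` extension specification: the number of samples N is the footprint’s long-to-short ratio, rounded up and capped by the AF setting, and the MIP level is chosen for the long side divided by N. Actual GPUs may use different approximations, and `anisotropic_params` is a hypothetical helper.

```python
import math

def anisotropic_params(dudx, dvdx, dudy, dvdy, max_aniso=16):
    """Return (samples, lod) for one pixel, given derivatives in texels per pixel.

    max_aniso plays the role of the 2x/4x/8x/16x setting.
    """
    p_x = math.hypot(dudx, dvdx)
    p_y = math.hypot(dudy, dvdy)
    p_max, p_min = max(p_x, p_y), min(p_x, p_y)
    if p_max == 0.0:
        return 1, 0.0                  # no footprint at all: one sample is enough
    if p_min == 0.0:
        n = max_aniso                  # infinitely thin footprint: hit the cap
    else:
        n = min(math.ceil(p_max / p_min), max_aniso)
    # Each sample only has to cover p_max / n texels, so a sharper MIP level suffices.
    lod = max(0.0, math.log2(p_max / n))
    return n, lod

# A floor pixel: 1 texel across, 8 texels deep.
print(anisotropic_params(1.0, 0.0, 0.0, 8.0))               # (8, 0.0)
print(anisotropic_params(1.0, 0.0, 0.0, 8.0, max_aniso=4))  # (4, 1.0)
```

With 8 or more samples allowed, this floor pixel is filtered from the full-resolution level; with a 4x cap it drops to level 1. In this model, a pixel facing the camera head-on has a ratio of 1 and gets a single sample, so untilted surfaces cost nothing extra.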

Isotropic algorithms effectively use square or circular sampling windows. AF, on the other hand, approximates an elongated, roughly elliptical footprint that follows the tilt of the texture, typically by spreading several samples along the footprint’s long axis. The sampling area is therefore stretched in the same direction as the pixel’s footprint on the texture, avoiding unnecessary blur and keeping fine details intact. In short, AF continuously adapts to a texture’s angle relative to the camera, producing much sharper results than isotropic methods at oblique angles.
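The “several samples along the long axis” idea can be sketched as follows. This is a simplified model that assumes equally weighted probes spaced evenly along the footprint’s major axis; real hardware may place and weight them differently. `anisotropic_sample` and its parameters are hypothetical, and `sample_fn` stands in for any isotropic lookup such as a bilinear or trilinear fetch.

```python
def anisotropic_sample(sample_fn, u, v, axis_du, axis_dv, n):
    """Average n isotropic lookups spread along a footprint's major axis.

    (u, v) is the footprint center and (axis_du, axis_dv) the full major axis,
    both in texels; sample_fn(u, v) returns one filtered value.
    """
    total = 0.0
    for i in range(n):
        # Center of the i-th of n equal segments, as a fraction of the axis in [-0.5, 0.5].
        t = (i + 0.5) / n - 0.5
        total += sample_fn(u + t * axis_du, v + t * axis_dv)
    return total / n

# Record where the probes land for a 4-sample footprint 8 texels long in v.
probes = []
def recording_sampler(u, v):
    probes.append((u, v))
    return 1.0

print(anisotropic_sample(recording_sampler, 0.0, 0.0, 0.0, 8.0, 4))  # 1.0
print(probes)  # [(0.0, -3.0), (0.0, -1.0), (0.0, 1.0), (0.0, 3.0)]
```

In a fuller model, `n` and the MIP level used by each probe would come from the anisotropy ratio described above, so the probes cover the long axis while each one stays sharp.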

Benefits of Anisotropic Filtering in Games

Modern games aim to fully immerse you in their virtual worlds, and textures play a massive part in delivering that immersion. Here are some reasons to love AF:

- Sharper details on slanted surfaces: from winding roads to sloped walls, surfaces remain crisp and easy to read.

- Better scene readability: it’s easier to pick out subtle details in the distance, whether you’re spotting enemies in a shooter or appreciating the artistry in an RPG environment.

- Fewer artifacts: AF recovers detail by taking extra samples rather than by forcing a sharper MIP level, so distant surfaces gain clarity without the flickering and shimmer that an overly sharp MIP level would cause.

- Broad compatibility: AF works on practically every modern GPU and is light on performance, making it a great complement to anti-aliasing, DLSS/FSR/XeSS, and other cutting-edge rendering techniques.

Anisotropic Filtering vs Other Texture Filtering Methods

For a complete picture, here’s how AF stacks up against other popular filtering techniques. Bilinear filtering is fast and simple, but it can leave textures looking very blurry at steep angles. Trilinear filtering smooths transitions between MIP levels but still doesn’t handle angled surfaces well. Mipmapping is great for reducing flicker and shimmer, but it scales textures uniformly, ignoring perspective distortion. Anisotropic filtering, by contrast, adapts its sampling to each surface’s angle, delivering the best clarity at the cost of some additional GPU work.

Isotropic methods aren’t fully outdated: they’re still useful on weaker hardware or in cases where raw performance is a bigger priority than visual fidelity. But in most modern desktop games (especially AAA titles), anisotropic filtering is the standard because of its unbeatable clarity.

Performance Impact of Anisotropic Filtering

A big question is how much AF will affect your FPS. In the early days of 3D accelerators, pushing AF to 16x could tank performance. But nowadays, hardware has evolved dramatically.

Modern GPUs have dedicated texture units that handle texture sampling. When AF is enabled, these units fetch and filter the extra samples in fixed-function hardware, so the shader cores do little additional work; the main costs are extra texture fetches and memory bandwidth. As a result, 16x AF often costs only a few frames per second on mid-range or high-end systems, a relatively small price for a big visual upgrade in most games.
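A rough upper bound shows where that extra work comes from. In the idealized model sketched below, a bilinear lookup reads 4 texels, a trilinear lookup reads 8, and each AF sample is a full trilinear lookup; the per-pixel sample count follows the anisotropy ratio, so only tilted surfaces pay extra. The numbers are illustrative worst cases, not measurements, and GPUs apply optimizations that usually read far fewer texels.

```python
import math

BILINEAR_TEXELS = 4                     # 2x2 neighbourhood
TRILINEAR_TEXELS = 2 * BILINEAR_TEXELS  # one bilinear lookup on each of two MIP levels

def worst_case_texels(ratio, max_aniso):
    """Upper bound on texels read for one AF lookup in this idealized model.

    ratio is the footprint's long-to-short ratio; max_aniso is the AF setting.
    """
    samples = max(1, min(math.ceil(ratio), max_aniso))
    return samples * TRILINEAR_TEXELS

print(worst_case_texels(1.0, 16))   # 8: a surface facing the camera costs the same as trilinear
print(worst_case_texels(3.2, 16))   # 32
print(worst_case_texels(40.0, 16))  # 128: the 16x cap bounds the cost
```

Because most of a typical frame is not viewed at extreme angles, the average cost sits well below the worst case, which helps explain why the FPS hit is usually small.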

Choosing an AF Level:

- 2x or 4x: good for budget PCs or laptops where performance is tight.

- 8x: the sweet spot, balancing noticeably sharper textures against a minimal FPS hit.

- 16x: maximum detail, ideal for powerful gaming rigs or when quality is king.

In most modern games, you can safely crank AF up to 8x or 16x without noticeable performance dips. Only on older machines might you want to dial it down a bit.

How to Configure Anisotropic Filtering in Modern Games

Some recent games let you enable AF under “Graphics Settings” or similar. You’ll typically see it listed as anisotropic filtering levels (2x, 4x, 8x, 16x), or sometimes as “Low”, “Medium”, “High”, or “Ultra”. The default often sits at 4x or 8x.
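On the engine side, a menu choice ultimately becomes a maximum-anisotropy value on the texture sampler, limited by what the GPU supports (Vulkan, for example, reports this limit as `maxSamplerAnisotropy`). The sketch below assumes a hypothetical preset table, since real games map “Low” through “Ultra” however they like; a value of 1 effectively means plain isotropic filtering.

```python
# Hypothetical preset table: each game chooses its own mapping.
PRESET_TO_AF = {"Off": 1, "Low": 2, "Medium": 4, "High": 8, "Ultra": 16}

def effective_max_anisotropy(setting, device_limit=16.0):
    """Turn a menu setting ("High" or a number like 8) into a sampler value,
    clamped to the device limit and never below 1 (isotropic)."""
    requested = PRESET_TO_AF[setting] if isinstance(setting, str) else setting
    return max(1.0, min(float(requested), device_limit))

print(effective_max_anisotropy("High"))                # 8.0
print(effective_max_anisotropy(16, device_limit=8.0))  # 8.0
print(effective_max_anisotropy("Off"))                 # 1.0
```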

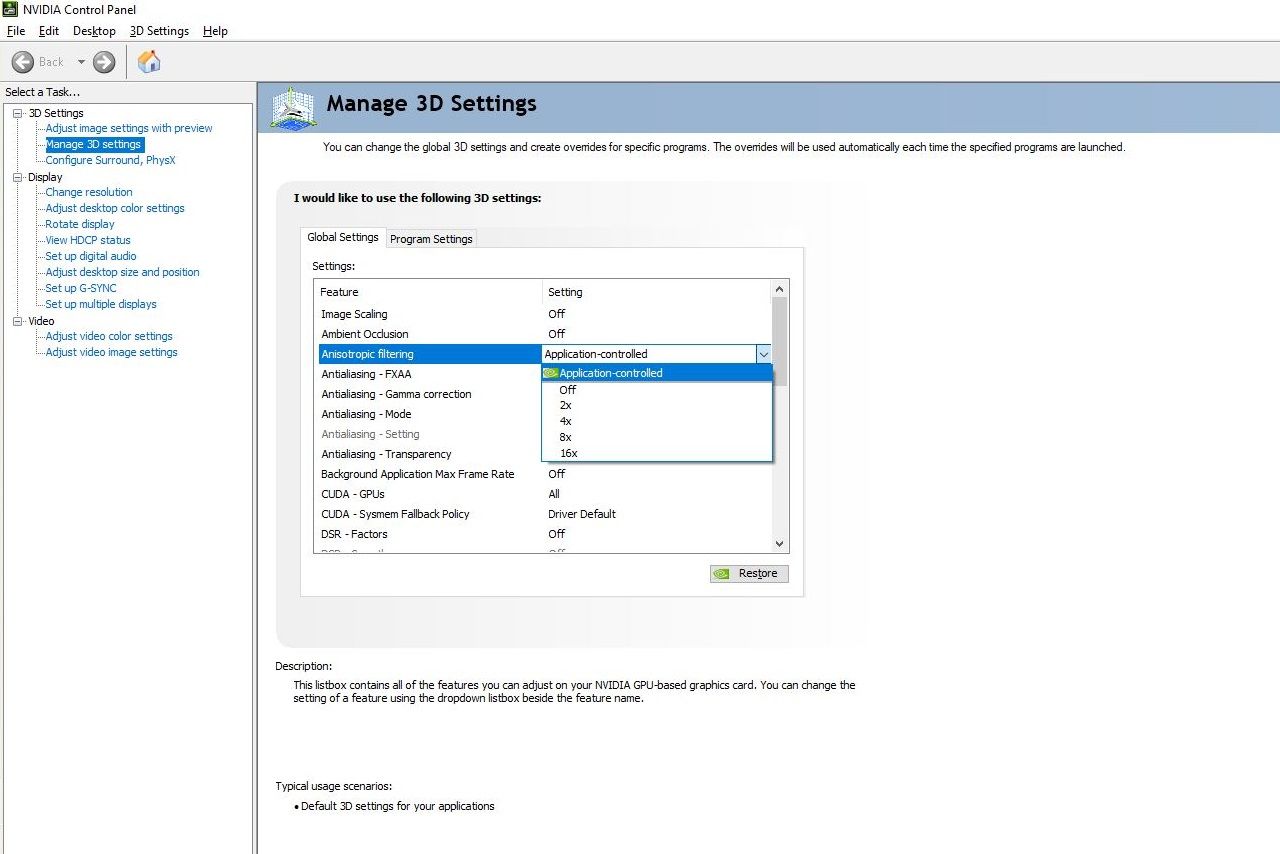

But many games don’t offer a separate AF option, folding it into grouped settings like “Texture Quality” instead. If a game doesn’t expose AF, you can force-enable it in your graphics driver.

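- For NVIDIA: open the “NVIDIA Control Panel”, go to “Manage 3D settings”, find “Anisotropic filtering”, and set your desired level. (“Texture filtering - Anisotropic sample optimization” is a separate option that trades some quality for speed.)

- For AMD: in AMD Software: Adrenalin Edition, go to “Gaming”, “Graphics”, open “Advanced”, enable “Anisotropic Filtering”, and choose a level.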

- For Intel: similar options may be available in the “Intel Graphics Command Center” (if supported).

And some recommendations for different hardware:

- Gaming laptops (with discrete GPUs): 4x–8x AF for a good balance.

- Desktop PCs with mid-range GPUs: 8x AF.

- PCs with powerful GPUs: 16x AF to squeeze out every last bit of detail.

Alternative Methods for Improving Texture Quality

Anisotropic filtering isn’t the only trick in the book for razor-sharp textures. Machine learning and smarter upscaling algorithms have also come a long way:

- DLSS (NVIDIA): a neural network approach that renders games at a lower resolution and then uses AI to reconstruct a higher-resolution image, boosting performance while preserving clarity.

- FSR (AMD): an upscaling technique that similarly focuses on performance gains with decent visual quality; versions up to FSR 3 use hand-tuned algorithms rather than AI, while FSR 4 adds machine learning.

- XeSS (Intel): an AI-based upscaler that runs best on Intel Arc GPUs using their XMX matrix units, and falls back to a DP4a-based path on other GPUs.

Anisotropic filtering remains essential because it tackles the fundamental issue of how textures are sampled at steep angles, which other methods don’t always address directly.

FAQ

What does anisotropic filtering do?

Anisotropic filtering keeps textures crisp and detailed, making roads, walls, and distant objects look sharp instead of a blurry mess.

Does anisotropic filtering affect FPS?

Anisotropic filtering has a minimal impact on FPS, as modern GPUs handle it efficiently, giving you sharper textures with little performance loss.

What is 16x anisotropic filtering?

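16x anisotropic filtering is the highest AF setting offered by mainstream graphics APIs and drivers. It lets the GPU take up to 16 samples along a texture’s stretched axis, giving maximum sharpness at extreme angles and making distant surfaces look significantly better.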

Is anisotropic filtering good for low-end PCs?

Anisotropic filtering is generally fine for low-end PCs, as it has minimal impact on FPS, but you can lower it a bit if needed.

TL;DR

Anisotropic filtering is undoubtedly one of the key technologies for enhancing texture quality in 3D graphics. Rather than blurring images uniformly in all directions (like isotropic methods), AF looks at your surface angles and dynamically adapts its texture sampling to keep details razor-sharp.

Thanks to dedicated texture hardware in modern GPUs and built-in support in game engines, using AF typically has only a minor impact on your framerate (especially if your rig is even remotely up to date). These days, 8x or 16x AF has become a staple in AAA games, offering an excellent balance between performance and visual fidelity.

While advanced techniques like DLSS, FSR, and various anti-aliasing methods are becoming more sophisticated, AF remains special. Its focus on handling angled surfaces sets it apart, and no other single feature delivers such a straightforward solution for maintaining texture clarity across vast, tilted landscapes.

So if you’re after the best possible visuals in your favorite games, cranking up anisotropic filtering is one of the easiest wins available. It’s far from obsolete and will likely remain a core part of rendering pipelines for years to come, keeping virtual worlds vibrant and immersive.