DLSS: In-Depth Guide to Its Performance and Graphics Benefits


DLSS (Deep Learning Super Sampling) is NVIDIA’s game-changing tech for gamers who want top-tier visuals and high frame rates without turning their GPU into a jet engine. In this article, we’ll look at how DLSS works, which versions of the technology exist (with a brief history of its creation), how it differs from its competitors, and how to configure it properly for maximum performance and image quality.

Table of contents

- Introduction

- What Is DLSS

- How DLSS Works

- History of DLSS Development

- Advantages and Disadvantages of DLSS

- DLSS vs FSR vs XeSS

- DLSS in Practice

- How to Enable DLSS in Games

- FAQ

- TL;DR

Introduction

Supersampling methods have long been used in computer graphics to smooth out the jaggies that appear due to the discrete structure of pixels. The idea behind traditional supersampling is straightforward: render a frame at a higher resolution and then downscale it to a lower resolution. This yields smoother lines and less obvious aliasing artifacts. However, it also places a hefty load on the GPU, which has to compute a lot more pixels right from the start.

To grasp how significantly that impacts performance, consider that going from 1080p to 4K quadruples the number of pixels. That kind of load isn’t always worth it because not all those extra pixels will be critical to what you see on screen. So game developers and GPU manufacturers sought alternative ways to cheat the system: render scenes more quickly at lower resolutions and then boost the final quality using advanced algorithms.
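The pixel arithmetic behind that claim is easy to check. The short sketch below uses the standard 1920x1080 and 3840x2160 resolutions; the helper name `pixel_count` is made up for illustration.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels the GPU has to shade for a single frame."""
    return width * height


full_hd = pixel_count(1920, 1080)   # 1080p
ultra_hd = pixel_count(3840, 2160)  # 4K

ratio = ultra_hd / full_hd
print(f"1080p: {full_hd:,} px, 4K: {ultra_hd:,} px, ratio: {ratio:.1f}x")
# prints "1080p: 2,073,600 px, 4K: 8,294,400 px, ratio: 4.0x"
```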

Classic anti-aliasing methods (MSAA, FXAA, TAA) each address jaggies in their own way, but they also introduce drawbacks. Multisample anti-aliasing can be as demanding as brute-force supersampling, fast approximate anti-aliasing often blurs the image and loses details, and temporal anti-aliasing can produce ghosting or unwanted blur in motion. With the rise of GPUs capable of running machine learning workloads, the idea arose to hand off anti-aliasing and upscaling duties to a neural network, which could make smarter decisions about which details need sharpening and which don’t. This concept eventually materialized in the form of DLSS.

What Is DLSS

At its core, DLSS is an AI-powered upscaling and frame reconstruction technology designed to squeeze the most out of your GPU. It taps into NVIDIA’s tensor cores (specialized hardware designed to handle AI tasks efficiently) to run deep learning algorithms in real time. It works only on NVIDIA RTX 20-series and newer GPUs, as they are the only ones equipped with these cores. In practice, your game renders at a lower resolution (1080p, for example), and DLSS reconstructs the image at 2K, 4K, or even 8K, a resolution that is impractical to render natively, giving you crisp visuals without tanking performance. In some cases, DLSS can make games look even sharper than native rendering by reconstructing fine details.

Here’s what DLSS brings to the table:

- Super resolution: an AI-enhanced upscaling technique that heightens clarity, minimizes jagged edges, and reduces visual noise.

- Frame generation: debuting with DLSS 3, this feature fabricates entirely new AI-driven frames, dramatically boosting frames per second (FPS). It is supported exclusively on RTX 40-series graphics cards and newer.

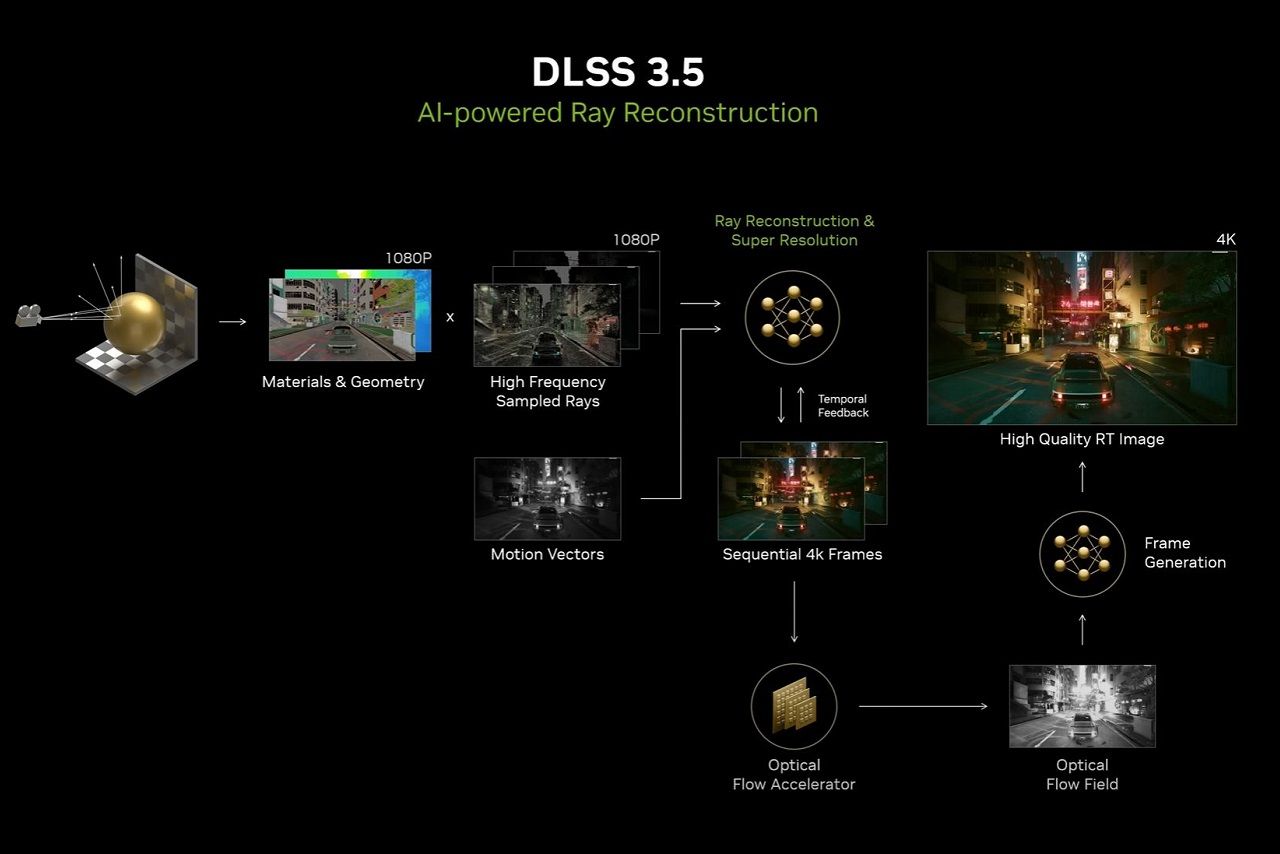

- Ray reconstruction: introduced in DLSS 3.5, this upgrade refines ray-traced lighting and reflections by replacing conventional denoisers with an AI-guided solution.

- DLAA (Deep Learning Anti-Aliasing): applies the same AI model at native resolution, with no upscaling. It serves as a higher-quality alternative to TAA, but it costs performance instead of adding it.

DLSS 4, the most recent version, introduces multi-frame generation, which creates multiple AI-powered frames for every traditionally rendered one and significantly raises FPS. This component is limited to RTX 50-series GPUs, but DLSS 4 still brings its improved super resolution and ray reconstruction to RTX 20-, 30-, and 40-series cards.

With each iteration, DLSS has become an essential tool for modern gaming, enabling smooth performance and stunning visuals even in the most graphically demanding titles.

How DLSS Works

DLSS uses a pre-trained neural network to transform a low-resolution frame into a crisp, high-res image. Here’s the cool part: it doesn’t just look at the single frame you’re on—it also taps into previous frames to reduce visual glitches and sharpen the overall picture.

During training on NVIDIA’s servers, the network gets fed pairs of images, one in super-high resolution and one deliberately downgraded. By comparing these, it learns how to replace missing pixels and apply smoother edges. After countless comparisons, DLSS “remembers” how different textures, shapes, and colors should really look in your game.
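To make the idea of a training pair concrete, here is a toy sketch in plain Python. It is only an illustration: NVIDIA’s actual data pipeline and loss function are not described in this article, so the box-filter downsampling, the nearest-neighbor upscale, and the mean squared error below are all assumptions chosen for simplicity.

```python
def downsample_2x(image):
    """Average every 2x2 block into one pixel (a simple box filter)."""
    h, w = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def upscale_nearest_2x(image):
    """Naive upscale: repeat every pixel twice in both directions."""
    return [[v for v in row for _ in range(2)] for row in image for _ in range(2)]


def mean_squared_error(a, b):
    """Average squared difference between two images of the same size."""
    diffs = [(pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# A 4x4 grayscale "high-res" image with a sharp vertical edge.
high_res = [
    [0, 0, 0, 255],
    [0, 0, 0, 255],
    [0, 0, 0, 255],
    [0, 0, 0, 255],
]

low_res = downsample_2x(high_res)
training_pair = (low_res, high_res)  # (network input, target)

# The error a naive upscaler leaves behind; training would push a
# network to reconstruct the edge better than this baseline.
loss = mean_squared_error(upscale_nearest_2x(low_res), high_res)
print(low_res, loss)
```

The downsampled image smears the edge into a gray column, which is exactly the kind of lost detail the network learns to restore.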

When you enable DLSS, each frame in your game goes through a reconstruction algorithm that also uses motion vectors (they track how objects move between frames). This helps figure out what needs to be redrawn or refined. Specialized tensor cores on RTX cards handle the heavy number-crunching, so the GPU spends fewer resources to reach nearly the same visual quality. The result is a final image that’s practically indistinguishable from a native-resolution render, plus a sweet FPS boost, especially in games with demanding effects like ray tracing.
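The role of motion vectors can be sketched with classic temporal accumulation, the hand-written technique that TAA-style methods use. This is not DLSS itself: DLSS replaces the fixed blending rule with a neural network. The function names, the `alpha` blend weight, and the tiny one-row frame are hypothetical and exist only for illustration.

```python
def reproject(previous, motion):
    """For each pixel, fetch the history sample it came from.

    motion[y][x] is the (dx, dy) the content moved since the last frame,
    so the matching history sample sits at (x - dx, y - dy).
    """
    h, w = len(previous), len(previous[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dx, dy = motion[y][x]
            sx, sy = x - dx, y - dy
            if 0 <= sx < w and 0 <= sy < h:
                row.append(previous[sy][sx])
            else:
                row.append(None)  # no valid history (off-screen or newly revealed)
        out.append(row)
    return out


def accumulate(current, history, alpha=0.5):
    """Blend the current frame with reprojected history.

    Where history is missing, fall back to the current sample.
    """
    return [
        [c if hst is None else alpha * c + (1 - alpha) * hst
         for c, hst in zip(cur_row, hist_row)]
        for cur_row, hist_row in zip(current, history)
    ]


# A bright pixel moves one step to the right between frames.
previous = [[0, 100, 0, 0]]
current = [[0, 0, 80, 0]]          # same object, slightly noisy sample
motion = [[(1, 0)] * 4]            # everything moved +1 in x

history = reproject(previous, motion)
result = accumulate(current, history)
print(history)  # [[None, 0, 100, 0]]
print(result)   # [[0, 0.0, 90.0, 0.0]]
```

Because the history is fetched along the motion vector, the old and new samples of the moving pixel line up and reinforce each other instead of leaving a ghost trail behind.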

History of DLSS Development

DLSS 1.0 made its debut in 2018 with the Turing architecture (RTX 20-series GPUs). At that time, the technology was intriguing but far from perfect: in some titles the image looked blurry, and quick camera turns produced noticeable artifacts. DLSS also required per-game training, meaning each title needed its own special model, which wasn’t always consistent or well-optimized.

Later, DLSS 2.0 arrived with major improvements that made the technology more flexible and efficient. Instead of requiring separate training for each game, it introduced a more generalized model that works across a wide range of titles without additional fine-tuning. The temporal upscaling process became more advanced, making better use of data from previous frames to enhance image quality. This led to fewer visual artifacts and less blur in motion, even in fast-paced scenes. Subsequent updates (DLSS 2.1, 2.2, 2.3) further refined the technology, introducing optimizations that improved clarity in fine details (for example, small text on textures or intricate patterns).

However, the real breakthrough announcement was DLSS 3.0, which introduced frame generation. Instead of only upscaling each rendered frame, DLSS 3.0 can generate entirely new in-between frames using AI. This can drastically increase FPS because the game engine only has to render half the frames natively while DLSS conjures the others. The flip side is that some players notice unnatural motion artifacts or slight input lag. Nevertheless, many games are embracing frame generation for its impressive bump in fluidity and performance.
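The FPS math behind frame generation can be sketched in a few lines. This is an idealized upper bound: it assumes generating frames costs nothing, whereas in practice the extra work slightly lowers the rendered rate. The function names are hypothetical, and the value of 3 generated frames is just an illustrative stand-in for multi-frame generation.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int = 1) -> float:
    """Ideal output rate when AI frames are inserted after each rendered one."""
    return rendered_fps * (1 + generated_per_rendered)


def rendered_share(generated_per_rendered: int = 1) -> float:
    """Fraction of displayed frames that the game engine actually rendered."""
    return 1 / (1 + generated_per_rendered)


# One generated frame per rendered frame (DLSS 3 style frame generation).
print(displayed_fps(30), rendered_share())      # 60 0.5

# Several generated frames per rendered frame (multi-frame generation).
print(displayed_fps(30, 3), rendered_share(3))  # 120 0.25
```

Note that input is still sampled only on rendered frames, which is why a higher displayed FPS does not automatically mean lower input lag.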

Shortly afterward, NVIDIA unveiled DLSS 3.5, which added the ray reconstruction feature mentioned above. It aims to reduce unwanted artifacts by using AI to handle reflections, lighting, and shading more accurately, especially in ray-traced scenes.

And the latest version, DLSS 4, introduced multi-frame generation (which has already been discussed earlier in this article) with a bunch of other improvements, all designed to further refine the technology.

Advantages and Disadvantages of DLSS

Advantages of DLSS

- Significant performance gains at high resolutions (like 4K), especially useful when ray tracing is enabled.

- Detail restoration and jaggie reduction that often rivals native rendering quality.

- Ongoing evolution: each new version of DLSS refines edge handling, reduces blur, and lessens ghosting.

- Frame generation can double framerates in supported games, delivering a big boost in smoothness.

Disadvantages of DLSS

- Hardware lock-in: the tech requires tensor cores, so only RTX-series GPUs (20, 30, 40, 50) can run DLSS. Frame generation is exclusive to the 40 and 50 series, and multi-frame generation to the 50 series.

- Artifacts (usually ghosting) in very fast motion, particularly with early versions or suboptimal game integration.

- Frame generation overhead may add input lag or distortion, which is especially noticeable in fast-paced multiplayer shooters.

- Some gamers dislike AI-generated pixels and frames and prefer native resolution for a pure image.

DLSS vs FSR vs XeSS

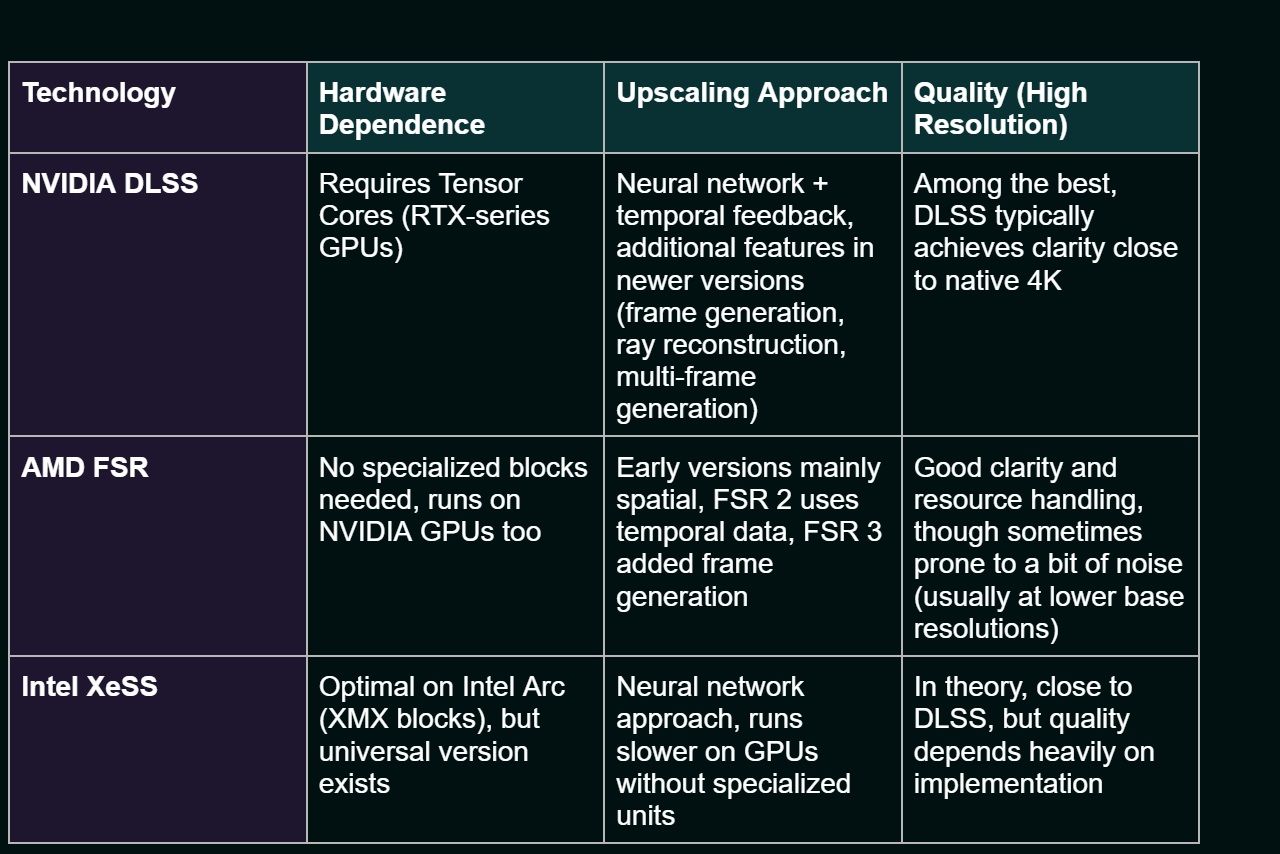

As neural network upscaling has grown in popularity, AMD and Intel have stepped into the ring with their own solutions. The table below sums up their approaches and hardware requirements.

AMD FidelityFX Super Resolution (FSR) is available in multiple iterations. The earliest (FSR 1.0) was effectively refined spatial upscaling. Later versions (FSR 2.x) incorporate temporal data, bringing them closer to DLSS in theory, though direct comparisons usually show DLSS holding an edge in dynamic scenes. FSR’s big plus is its broad compatibility across a wide range of GPUs, even older cards from both NVIDIA and AMD. FSR 3 added frame generation, and in some games, it actually works better than its DLSS counterpart.

Intel XeSS also employs machine learning and can run in two modes. One leverages specialized XMX blocks in Intel Arc GPUs, while the other relies on more standard shader math for universal use on different cards. Performance and final image quality vary depending on the specific optimization and XeSS version, but the aim is to rival DLSS in both upscaling and anti-aliasing.

DLSS in Practice

To really feel what DLSS brings to the table, it helps to look at performance in actual gameplay. Titles notorious for their GPU demands (especially those using ray tracing) are excellent test cases.

- For a long time, Cyberpunk 2077 was a go-to benchmark for graphics cards. With ray tracing maxed out in 4K, even heavyweight GPUs might manage only suboptimal 25-40 FPS in certain scenes. But with DLSS set to Quality, the game often jumps to 60 FPS or higher. Switching to Performance mode can raise FPS further, though some players report mild artifacts or a softer look to distant objects.

- Developed by Remedy, Control is packed with atmospheric effects and reflection-heavy environments, making it tough on hardware. DLSS here can bump a sub-60 FPS rate at 2K to a comfortably high framerate, often well above 60. The technology also tends to reduce graininess you sometimes see on reflective or particle-heavy surfaces.

- Metro Exodus uses ray-traced reflections and global illumination that chew through GPU resources. DLSS helps it maintain around 60 FPS at 4K on higher settings (notably in the Enhanced Edition). Without DLSS, framerates can sink below 40.

- A Plague Tale: Requiem and Portal RTX both make extensive use of frame generation. It can roughly double the framerate (jumping from around 30 to 60 FPS or more), but some players report minor artifacts.

- Remnant II has high system demands and also benefits significantly from DLSS. With modes like Quality or Balanced, the game can often stay at or above 60 FPS, whereas without upscaling it may drop to 40 or lower.

Despite minor graphical issues, the overall clarity and performance gains often make DLSS preferable to pure native rendering.

How to Enable DLSS in Games

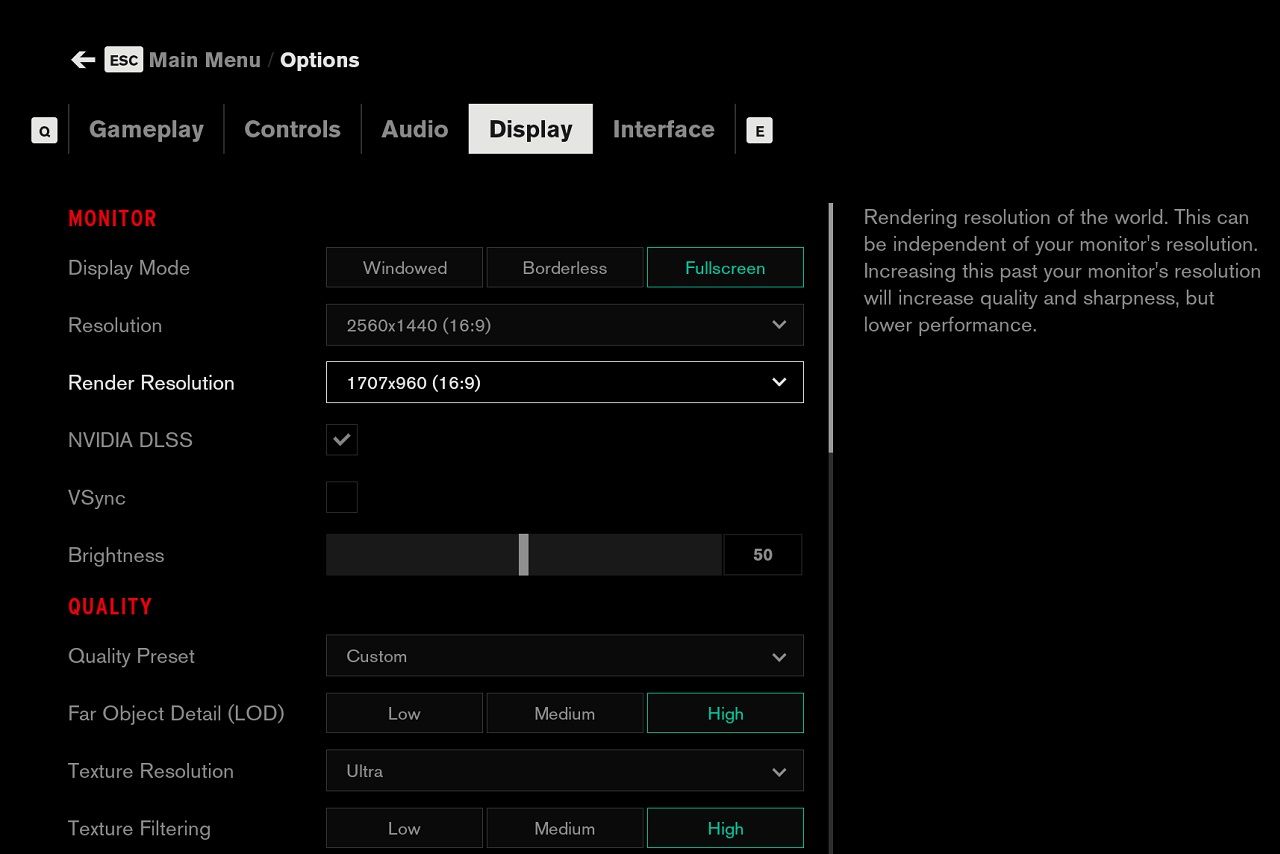

If you want to give DLSS a shot, head over to the “Video Settings” or “Graphics” section of any supported game. There’s usually an option labeled “NVIDIA DLSS” or “DLSS Super Resolution”. In titles that support DLSS 3.0 or higher, you may also see a “Frame Generation” toggle.

Most games offer four modes: Quality, Balanced, Performance, and Ultra Performance. If you’d like to keep top-tier detail while still enjoying a decent FPS bump, go with Quality or Balanced. Performance or Ultra Performance lower the base resolution more aggressively, which can yield a massive performance boost, but may look softer on distant scenery or fine details, or worse than native in general. For very resource-intensive scenes, these modes can be a lifesaver to maintain smooth gameplay.
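To see what “lowering the base resolution” means in numbers, the sketch below maps each mode to an internal render resolution. The per-axis scale factors are the commonly cited defaults, not values stated in this article, and individual games may override them, so treat them as assumptions.

```python
# Commonly cited per-axis render scale for each mode (assumed defaults;
# a specific game may use different values).
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_width: int, out_height: int, mode: str) -> tuple:
    """Resolution the game renders at before DLSS upscales to the output size."""
    scale = DLSS_SCALE[mode]
    return round(out_width * scale), round(out_height * scale)


for mode in DLSS_SCALE:
    w, h = internal_resolution(3840, 2160, mode)
    share = (w * h) / (3840 * 2160)
    print(f"{mode:>17}: {w}x{h} ({share:.0%} of the output pixels)")
```

At a 4K output, Performance mode renders at 1920x1080, only a quarter of the pixels, which explains both the large FPS gain and the softer look on fine details.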

It’s wise to keep your NVIDIA drivers current, since NVIDIA regularly releases optimizations that enhance DLSS integration for new titles and address known visual glitches. Some engines (particularly Unreal Engine) blend DLSS with other anti-aliasing methods like TAA. Implementation varies, so the final look depends on how the developer sets it up. Sometimes TAA is hardcoded and simply layered under DLSS, which might or might not be ideal.

Because DLSS relies on tensor cores, it’s limited to RTX GPUs (20-50 series). Owners of GeForce GTX or non-RTX cards can’t directly enable DLSS, though they may turn to FSR or XeSS (if supported).
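The hardware requirements mentioned throughout this article can be summarized as a small lookup. The table below only encodes what the article states (minimum RTX series per feature); the names `MIN_SERIES` and `supported_features` are hypothetical, and a game must still implement each feature for it to show up in its settings.

```python
# Minimum RTX series for each DLSS feature, as described in this article.
MIN_SERIES = {
    "Super Resolution": 20,
    "DLAA": 20,
    "Ray Reconstruction": 20,
    "Frame Generation": 40,
    "Multi Frame Generation": 50,
}


def supported_features(rtx_series: int) -> list:
    """DLSS features available on a given RTX series (use 0 for non-RTX cards)."""
    return [name for name, minimum in MIN_SERIES.items() if rtx_series >= minimum]


print(supported_features(30))  # ['Super Resolution', 'DLAA', 'Ray Reconstruction']
print(supported_features(40))  # adds 'Frame Generation'
print(supported_features(0))   # [] -> fall back to FSR or XeSS if the game supports them
```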

FAQ

DLSS meaning—what does it stand for?

It stands for Deep Learning Super Sampling.

What does DLSS do?

On NVIDIA RTX GPUs, DLSS boosts gaming performance by rendering at a lower resolution and using deep learning to reconstruct high-quality upscaled frames.

Does DLSS improve FPS?

Yes. DLSS improves performance by rendering frames at a lower resolution and using AI to upscale them, which raises FPS without significant visual loss.

Should I enable DLSS?

If you want better performance without sacrificing much visual quality, enabling DLSS is a great option, especially at higher resolutions.

What is DLSS 4?

It’s the latest iteration of the tech, which introduced multi-frame generation, potentially resulting in even better performance.

TL;DR

DLSS emerged in response to growing demands for ultra-high resolutions and visually stunning effects (like ray tracing), things that once seemed too heavy for home gaming rigs to handle smoothly. By combining neural upscaling with temporal data, DLSS offers image quality that’s often close to native resolution, all while delivering a major boost in FPS.

Yes, the technology has limits (hardware exclusivity and occasional artifacts in certain scenes). However, for most modern AAA titles, DLSS strikes a remarkable balance between performance and fidelity.

Comparisons with FSR and XeSS indicate that all three solutions are rapidly advancing in the realm of neural network reconstruction, with AMD quickly closing the gap. AMD’s FSR 3 has matured significantly with temporal techniques and frame generation, and with the recent announcement of FSR 4 we can expect more changes and improvements. While Intel’s XeSS is designed to be competitive, it is lagging behind its competitors for now. AMD and Intel have time to improve, while NVIDIA is occupied with issues surrounding the 50-series RTX GPUs.

Ultimately, the choice between pure native rendering and smart upscaling is trending more and more toward upscaling. Natively rendered 4K may still be ideal in theory, but if you’re striving for high framerates and advanced effects, it’s tough to ignore the significant resource savings DLSS can offer. That resource headroom often means the difference between barely playable and a fluid, immersive experience.

Looking further down the road, we can expect even more hybrid approaches—ones that seamlessly switch between resolution levels, integrate advanced spatial/temporal data, or even leverage the full 3D geometry of the scene. In theory, these approaches will let games look more lifelike than ever without demanding unreal hardware specs.

Right now, DLSS stands as a leading solution for bridging top-notch image quality and excellent performance. If you have an RTX GPU, flipping DLSS on can immediately reward you with smoother gameplay and sharper visuals. Those on non-RTX cards can explore FSR or XeSS, which work in similar ways despite different algorithms and hardware demands. So, if you’re jumping into Cyberpunk 2077, Control, Remnant II, or any other blockbuster game with an upscaling option, give it a shot. You’ll likely be impressed—and your GPU will appreciate it too.