History of Anti-Cheats: From Manual Checks to Kernel & Secure Boot

A new wave of anti-cheats imposes strict requirements on players’ PCs. Battlefield 6 with Javelin AC won’t launch without Secure Boot enabled. Activision is phasing in TPM 2.0 and Secure Boot, making them mandatory for Call of Duty: Black Ops 7. PUBG is rolling out its own kernel-based solution that checks code at the deepest level when the client starts.

The price of this approach is compatibility and convenience:

- Players have to go into the BIOS and change the UEFI settings.

- Drivers can be incompatible, and false positives occur.

- Anti-cheats can even conflict with each other (like the recent case of Valorant’s Vanguard vs. Battlefield 6’s Javelin).

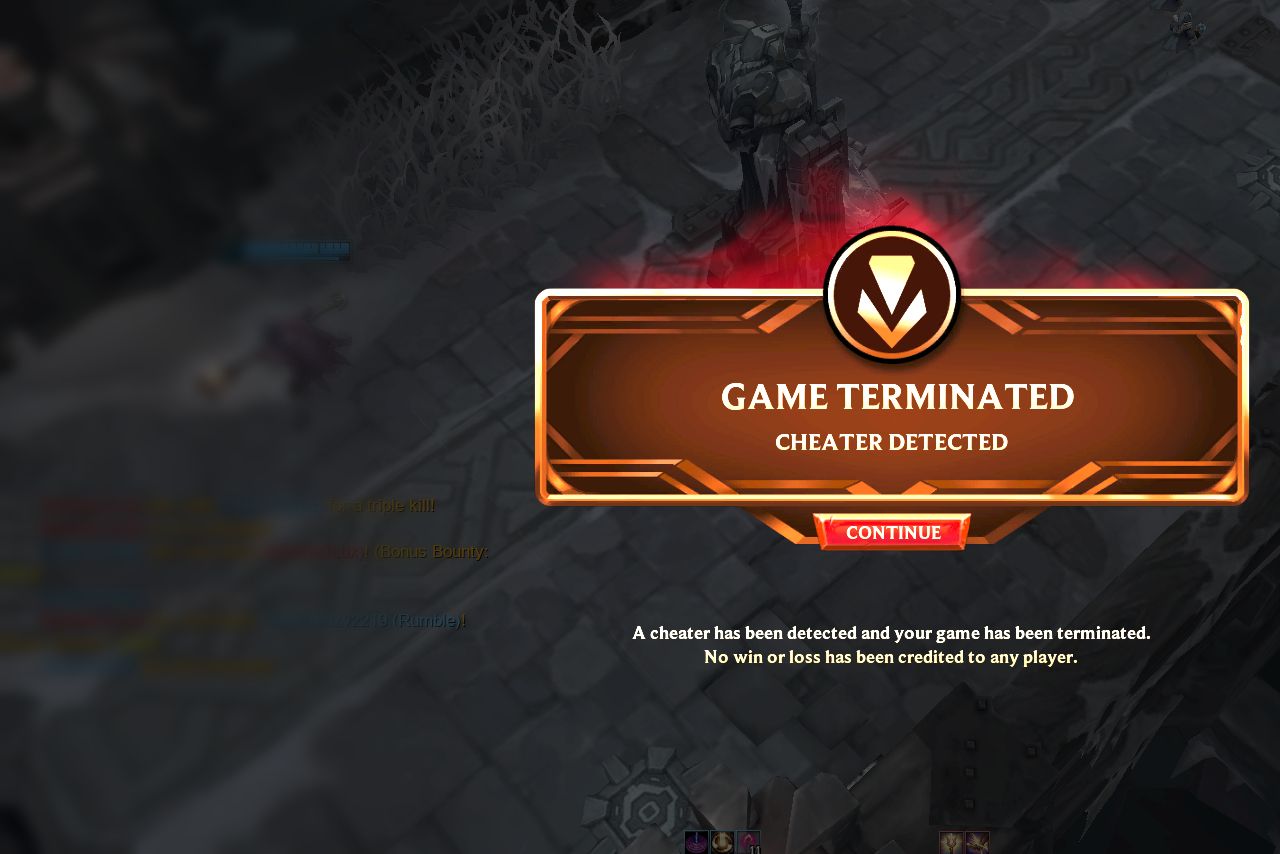

Anti-cheats are a necessary evil. Without them, ratings lose their meaning, matches turn into a lottery, and multiplayer games are quickly overrun by cheaters. In 2025, the gaming industry shifted to system boot checks, driver certification, and kernel-level anti-cheats.

Why Anti-Cheats Are Necessary in Online Games

Multiplayer titles with PvP or competitive modes quickly degrade without adequate protection against cheaters. Unfair advantages break matchmaking balance, destroy trust in rating systems, and discourage honest players from coming back. This directly affects retention, monetization of F2P games, and the eSports scene, where the quality and integrity of competition determine the value of a contest.

For studios and publishers, more than reputation is at stake. The cost of moderation, investigations, and appeals, support for ban-wave infrastructure, legal risks, and platform requirements all turn anti-cheat into a fundamental component of a live-service game. At the same time, any tightening of protection comes at a price: the risk of false bans, privacy and compatibility issues, and potential conflicts with drivers, other programs, or even other games.

This article is part 1 of a series. Here, we trace the historical evolution of anti-cheats and its key turning points. In part 2, we will analyze the principles of operation and modern methods of anti-cheats, and discuss risks, practical advice for players, and requirements for a trusted execution environment. In part 3, we will examine the cheat industry, which has expanded from niche homemade production to a sizable market that specifically targets RMT (real-money trading) and eSports, not just regular players.

Anti-Cheats History: Manual Moderation and Early Integrity Scans (1990s)

The first mass online shooters (Quake, Half-Life, and Counter-Strike) were born in the era of dial-up and a trusted client model. The simplest wallhacks and aim scripts changed the client’s behavior or rendering: texture/shading substitution (turn up transparency on the wall texture, and you got a wallhack), config tweaks, and primitive injections into process memory. Most servers were managed by the community, not by a central security service. Some server-side rules existed, but they were fragmented and inconsistent across communities.

Moderation relied on enthusiasts: admins maintained local ban lists, accepted demo recordings from players, and reviewed disputed plays manually. Developers patched exploits, and new workarounds quickly followed: a classic arms race without a single industry standard.

Technical limitations of the time aggravated the situation: weak server authority, lack of continuous telemetry, and modest resources for background checks. Anti-cheat as a separate product practically did not exist; security was a set of point checks and rules, heavily dependent on a specific game engine and the community around the game.

Third-Party Anti-Cheats and Client-Side Scanners (2000s)

In the early 2000s, the market for anti-cheat as a product took shape. PunkBuster (Even Balance) became the de facto standard for shooters (the Battlefield series, early Call of Duty games), and nProtect GameGuard for MMOs (Lineage II, etc.). At the same time, Valve’s VAC was strengthened: it grew from a set of checks into a system service at the Steam level for the CS franchise and Team Fortress 2.

The technical approach of these years was mainly signatures and integrity:

- Checking hashes of game files and libraries.

- Searching for known signatures of injected code and overlays.

- Basic heuristics (unnatural values of variables, prohibited hooks).

- Detection of debugging utilities and memory readers.
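The first item in the list, hash-based integrity checking, can be sketched in a few lines. This is a minimal illustration rather than how any particular anti-cheat works: the manifest format (relative path mapped to a SHA-256 hex digest) and the function names are assumptions made for the example.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large game assets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_modified_files(game_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return relative paths that are missing or whose hash differs.

    `manifest` is a hypothetical mapping of relative path -> expected
    SHA-256 hex digest, as a publisher might ship alongside a build.
    """
    modified = []
    for relative_path, expected_hash in sorted(manifest.items()):
        candidate = game_dir / relative_path
        if not candidate.is_file() or sha256_of(candidate) != expected_hash:
            modified.append(relative_path)
    return modified
```

A check like this only catches changes to files on disk. It says nothing about code injected into memory at runtime, which is why the scanners of the period paired it with signature searches and hook detection.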

Ban waves (delayed bans) became a tactic, popularized by VAC: the system records violations but applies punishments in batches after some time has passed. This deprives cheat developers of quick feedback and makes moderation cheaper for publishers, but it frustrates honest players, since detected cheaters stay in matches until the wave lands.
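The mechanics of a delayed ban can be shown with a small queue. This is a sketch under stated assumptions: the class name, the fixed interval between waves, and the string reasons are all hypothetical, and a real system would persist detections and review them before banning.

```python
from dataclasses import dataclass, field


@dataclass
class BanWaveQueue:
    """Collects detections silently and releases them in batches."""

    wave_interval_s: float  # hypothetical delay between waves, in seconds
    last_wave_at: float = 0.0
    pending: dict[str, str] = field(default_factory=dict)  # account -> reason

    def record(self, account_id: str, reason: str) -> None:
        # Keep the first detection; the player gets no immediate feedback.
        self.pending.setdefault(account_id, reason)

    def release_due(self, now: float) -> dict[str, str]:
        """Return the accounts to ban if a wave is due, else an empty dict."""
        if now - self.last_wave_at < self.wave_interval_s:
            return {}
        wave, self.pending = self.pending, {}
        self.last_wave_at = now
        return wave
```

Because every account in a wave is banned at the same moment, a cheat developer cannot tell which build or feature triggered the detection, or when.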

The social context was also changing. Modding was on the rise, LAN clubs were thriving, and the first wave of commercial cheat sites was emerging. Publishers responded with CD-key bans and global block lists, which reduced the resale value of keys and stimulated sales of new game copies. The downsides of the time were a rise in false positives (due to aggressive scanners) and frequent conflicts with drivers and overlays.

Live-Service Anti-Cheats, Telemetry, and Behavioral Detection (2010s)

By the middle of the decade, the video game industry had shifted its focus to anti-cheat as a service. Easy Anti-Cheat (EAC) and BattlEye (BE) offered ready-made SDKs, cloud analytics infrastructure, and regular signature/detection updates. Games no longer needed to build protection from scratch, because integration came down to connecting the service and setting up server policies. Smaller anti-cheat companies were acquired by larger ones or failed.

The growth of F2P and eSports changed priorities: the focus shifted to server telemetry and behavioral analysis. In addition to client checks (injection and hook detection, anti-debug, anti-tamper), the following came into wide use:

- Aiming and reaction-time statistics, headshot distribution, and cursor micromovements.

- Comparison of input and game result (deterministic checks, server authority).

- Correlation of anomalies by account, hardware, and network parameters.

- Automated appeals and escalation of specific cases to moderators.
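To make the statistical side of this list concrete, here is a minimal sketch of outlier detection on a single metric such as headshot ratio. It uses a robust z-score (median and median absolute deviation) so that one extreme player does not distort the baseline. The function names and the 3.5 threshold are assumptions for illustration; real systems combine many signals and would send a flag to review rather than straight to a ban.

```python
from statistics import median


def robust_z_scores(values: dict[str, float]) -> dict[str, float]:
    """Score each player by distance from the median, scaled by the MAD."""
    center = median(values.values())
    mad = median(abs(v - center) for v in values.values())
    if mad == 0:
        # No spread in the data, so nothing can be called an outlier.
        return {player: 0.0 for player in values}
    # 1.4826 makes the MAD comparable to a standard deviation for normal data.
    return {p: (v - center) / (1.4826 * mad) for p, v in values.items()}


def flag_outliers(values: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return players scoring above the (hypothetical) review threshold."""
    scores = robust_z_scores(values)
    return sorted(p for p, z in scores.items() if z > threshold)
```

For example, in a lobby where most headshot ratios sit around 0.2, a player at 0.85 is flagged while a strong but plausible 0.25 is not.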

The market for cheats is on the rise

In parallel, the fight against private cheats intensified: client anti-cheat modules were obfuscated more heavily, HWID spoofers were detected, and measures against user-space driver injection were tightened. Anti-cheat increasingly worked alongside anti-tamper/DRM (for example, to protect against the substitution of executable modules and import tables), and integrity checks moved to the boot and early initialization stage.

But this progress came at a price: more complex and resource-heavy netcode, compatibility conflicts, and rare but painful false bans for honest players (due to social overlays, mouse macros, or non-standard input device drivers).

The result of the decade: anti-cheat turned into a permanent service with a hybrid architecture (client plus server plus cloud analytics). This foundation enabled the move toward the kernel level and a trusted execution environment in the 2020s.

Kernel-Level Anti-Cheats, Hypervisors, and Secure Boot Requirement (2020s)

In the 2020s, the industry shifted from user-mode detection to the kernel level. When cheats infiltrate drivers and hypervisors, anti-cheat at the application level fails to detect them. The first anti-cheat to change the rules was Riot’s Vanguard in Valorant: it installs a persistent driver that starts with the OS and tracks low-level interventions even before the game begins, and on Windows 11 it also requires Secure Boot and TPM 2.0. The approach is controversial because of its kernel access and possible software conflicts, but it covers the class of hidden injectors and spoofers that previously remained out of sight.

But Javelin in Battlefield 6 was the turning point. After DICE announced that the game would not launch without Secure Boot enabled, Call of Duty and PUBG: Battlegrounds quickly followed with their own kernel-level solutions.

At the same time, the requirements for the trusted execution environment are tightening. Secure Boot, TPM, and VBS/HVCI form a chain of measured loading and isolation, reducing the chances of launching unsigned drivers and network sniffers. For several projects, the presence of such mechanisms has become a launch prerequisite: if the OS, drivers, or boot configuration cannot be confirmed, matchmaking is not available. On Linux via Proton/Steam Deck, the situation is mixed: EAC and BattlEye can work in this environment with specific settings, but the Secure Boot checks that newer anti-cheats demand are generally not available there.
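The launch condition described above boils down to a simple gate over the reported boot state. The sketch below is an assumption-heavy illustration: `EnvironmentReport` and its fields are hypothetical, the values are supplied by the caller rather than read from the OS, and a real anti-cheat would rely on attested measurements instead of trusting a self-reported structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EnvironmentReport:
    """Hypothetical summary of a client's boot state."""

    secure_boot: bool
    tpm_version: Optional[str]  # e.g. "2.0", or None if no TPM was found
    hvci_enabled: bool


def launch_blockers(report: EnvironmentReport, require_hvci: bool = False) -> list[str]:
    """Return human-readable reasons why matchmaking should be refused."""
    blockers = []
    if not report.secure_boot:
        blockers.append("Secure Boot is disabled")
    if report.tpm_version != "2.0":
        blockers.append("TPM 2.0 not found")
    if require_hvci and not report.hvci_enabled:
        blockers.append("HVCI is disabled")
    return blockers


def can_matchmake(report: EnvironmentReport, require_hvci: bool = False) -> bool:
    """Matchmaking is allowed only when no blocker applies."""
    return not launch_blockers(report, require_hvci)
```

Returning the list of reasons, rather than a bare boolean, lets the client tell the player which setting to change in the UEFI menu.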

Custom anti-cheats evolve into full platforms

For large publishers, anti-cheat becomes part of the platform, not a plugin. A single stack combines behavioral models and ML detection over input telemetry, accuracy, and patterns; anti-tamper that protects executable modules from substitution; and cloud analytics that correlate anomalies across accounts, hardware, and networks. Against this background, disputes persist: users want to know which processes and drivers are scanned and how long telemetry is stored, while developers decline to disclose detection details.

Practical issues also persist, including rare but noticeable FPS drops, input lag, network instability, and conflicts with peripheral drivers, overlays, and recording software. The open question is where to draw the line on transparency: how to explain the reasons for a block without handing cheaters ready-made tips for bypassing it.

The Cheat Economy

By the middle of the decade, the cheat market split into two niches. Mass public cheats are cheap, quickly released for popular games, poorly obfuscated, and short-lived. Ban waves and legal pressure on domains and payment gateways regularly hit them.

Private cheats are an order of magnitude more expensive. They come with a subscription model, client whitelisting, custom builds for specific games, patches, or tournaments, heavy obfuscation of bootloaders, drivers, and even hypervisors, HID input emulation, and HWID spoofing. The audience is narrower (mainly RMT and eSports), lifetime value (LTV) is higher, and the window before detection is longer.

The industry’s response has become more complex:

- Delayed ban waves break fast feedback to cheat developers.

- Honeypots in memory and on the network bait injectors into revealing themselves.

- HWID bans and device clustering make it harder for banned players to return.

- Legal actions and payment sanctions burn out the development and sales infrastructure.

- Shadowbans and quarantines isolate suspicious players without immediate notification.

As a result, the share of regular cheats decreases, while the market shifts to expensive private solutions and professional cheaters who have made cheating their everyday work. Bans become less frequent but more thorough: they rest on a deep correlation of telemetry, HWID, and network patterns, which makes returning under a new account noticeably more difficult. For genuine players, this means potentially fewer obvious cheaters in matches, but also stricter requirements for the environment, while false positives are still possible.
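Device clustering, mentioned in the list above, can be illustrated with a union-find structure that links accounts through shared hardware IDs. This is a minimal sketch, not a description of any vendor's system: the input format (pairs of account and HWID) and the function name are assumptions, and real pipelines weigh many noisier signals.

```python
from collections import defaultdict


def cluster_accounts(sightings: list[tuple[str, str]]) -> list[set[str]]:
    """Group accounts linked, directly or transitively, by a shared HWID.

    `sightings` is a hypothetical log of (account_id, hwid) pairs.
    """
    parent: dict[str, str] = {}

    def find(node: str) -> str:
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    def union(a: str, b: str) -> None:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_account_for_hwid: dict[str, str] = {}
    for account, hwid in sightings:
        find(account)  # register the account even if it shares nothing
        owner = first_account_for_hwid.setdefault(hwid, account)
        union(owner, account)

    clusters: dict[str, set[str]] = defaultdict(set)
    for account in list(parent):
        clusters[find(account)].add(account)
    return sorted(clusters.values(), key=sorted)
```

The transitive link is the point: if a banned account shares one device with a second account, and that second account shares another device with a third, all three land in the same cluster.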

Future Trends for Anti-Cheats

Deeper integration into PC

Anti-cheats will shift toward the hypervisor level and hardware attestation of the environment: measured boot, trust tokens via TPM, and checks of driver and module configuration before the game starts. The idea is to give the game a trust pipeline that blocks driver and hypervisor cheats before they are initialized. Where possible, checks will move into secure isolation (VBS/HVCI and similar).

More server authority

Key logic will move to the server once again: stricter determinism checks, hit registration and physics validation, and telemetry comparison with simulation. This reduces the value of client exploits, but requires careful latency management and high-quality netcode, or, in short, more computing resources.
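A simple instance of server-side validation is a movement check: the server refuses to accept a reported position that the player could not have reached in the elapsed time. The sketch below assumes a single maximum speed and a fixed tolerance for latency jitter; both parameters and the function names are hypothetical.

```python
import math

Position = tuple[float, float]


def is_move_plausible(
    prev_pos: Position,
    new_pos: Position,
    dt_s: float,
    max_speed: float,
    tolerance: float = 1.10,  # hypothetical slack for jitter and rounding
) -> bool:
    """Check that the distance covered fits within max_speed over dt_s."""
    if dt_s <= 0:
        return False
    distance = math.dist(prev_pos, new_pos)
    return distance <= max_speed * dt_s * tolerance


def resolve_position(
    prev_pos: Position, claimed_pos: Position, dt_s: float, max_speed: float
) -> Position:
    """Accept the client's claim only if plausible; otherwise keep server state."""
    if is_move_plausible(prev_pos, claimed_pos, dt_s, max_speed):
        return claimed_pos
    return prev_pos
```

The tolerance is where the latency trade-off shows up: set it too tight and laggy but honest players get snapped back, set it too loose and small speed hacks slip through.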

Complex input analytics and behavioral models

ML approaches will consolidate around the analysis of aiming trajectories, mouse microfluctuations, HID timings, and human error. For anti-bot detection, this means recognizing cyclic patterns and macros; for anti-aimbot detection, it means assessing how likely a target choice and a shot’s outcome are given the dynamics of the match. In theory, the quality of detection will increase as appeals become more transparent.
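Macro recognition from input timings can be sketched without any ML at all. Human input has irregular gaps between events, while a naive macro fires at near-constant intervals, so the coefficient of variation (standard deviation divided by mean) of those gaps is a useful first signal. The function names and the 0.02 threshold below are assumptions for illustration, and a macro that adds random jitter would need richer features.

```python
from statistics import mean, pstdev


def interval_cv(timestamps_ms: list[float]) -> float:
    """Coefficient of variation of the gaps between consecutive inputs."""
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if len(intervals) < 2:
        raise ValueError("need at least three timestamps")
    average = mean(intervals)
    if average <= 0:
        raise ValueError("timestamps must be increasing")
    return pstdev(intervals) / average


def looks_like_macro(timestamps_ms: list[float], cv_threshold: float = 0.02) -> bool:
    """Flag input whose timing is more regular than the (hypothetical) threshold."""
    return interval_cv(timestamps_ms) < cv_threshold
```

A sequence of clicks exactly 100 ms apart has a coefficient of variation of zero and is flagged, while clicks with gaps ranging from 75 to 185 ms are not.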

Transparency and control of collected data

The demand for audits is growing, raising questions about the types of data collected, their storage duration, and the structure of appeals and unbanning processes based on evidence. We can only hope for privacy settings on the player’s side (management of overlays, telemetry, logs), public reports on false bans, and formal procedures for independent audit.

Cross-platform issues

Support for Linux/Proton/Steam Deck will be expanded, provided that a Secure Boot equivalent appears for Linux and is compatible with the anti-cheat stack. Tiered trust modes are likely to grow, with full access (ranked playlists) available only if platform requirements are met, and simplified queues for less strict environments.

More legal actions

In the coming years, legal pressure on cheat developers and sellers will only intensify. Major publishers will lean on anti-circumvention and unfair-competition claims while squeezing the supply chain of domains, hosting, payment processors, and ad networks. We can also expect large publishers to collaborate.

Summary

The history of anti-cheats is a constant balancing act between fairness, privacy, availability, and performance. We have moved from manual moderation and point server checks to platform-based, deeply integrated stacks that combine a client, server, and cloud analytics.

The vector is obvious: more trusted infrastructure, more server authority, and more explainable moderation procedures. The winners in the video game industry will be those who deliver proper security without taxing performance or eroding player trust.

In the following articles, we will analyze how exactly modern anti-cheats work: threat models, client/server methods, the role of the kernel level and Secure Boot, sources of false bans, and practical advice for regular players. The final article will look at the cheat market and RMT.