GPU Guide: History, Architecture, Performance, and Buying Tips


A computer game is a complex simulation of a whole world, and the main translator of numbers into a picture is the graphics processing unit (GPU). It takes care of the 3D computer graphics operations that a universal central processing unit (CPU) would handle too slowly: complex geometry, lighting calculations, texture mapping, and post‑effects. Without a powerful GPU, modern gaming would either turn into a slideshow or be forced to simplify visuals dramatically.

Let’s look at:

- How graphics cards have evolved to their current state.

- What’s inside a modern GPU, and what happens during the rendering of a frame.

- Which GPU specs matter more in practice, and how to choose the right GPU for your needs.

- Common questions and issues related to the GPU.

- What we can expect in the near future.

A Brief History and Evolution of the GPU

- 1990s. The first 3D accelerators could output textured triangles, and to enable fog or transparency, you often had to select a specific mode for the card in the driver. Early 3D cards lacked hardware transform (projecting a 3D scene into a 2D view) and lighting (computing surface colors from the scene’s light sources), leaving that work to the CPU until the NVIDIA GeForce 256 (1999).

- 2000s. The advent of programmable shaders turned the GPU into a small supercomputer where the developer writes mini‑programs in High-Level Shader Language (HLSL) or OpenGL Shading Language (GLSL). From that moment, it became possible to create custom lighting and textures, or any other special effects. Early shaders were limited; programmability matured with Shader Model 2.0 (2002) and Shader Model 3.0 (2004).

- 2010s. The era of unified compute units and GPGPU arrived (general-purpose computing on graphics processing units; put simply, using the GPU for computing tasks previously handled by the CPU). A video chip was no longer just an artist: its cores were also used for calculating physics, accelerating renders, and running machine learning algorithms. NVIDIA’s Fermi (2010) was a major milestone for GPGPU, but some earlier GPUs (AMD R600) also supported computing.

- 2020s. Graphics faced exponential growth in resolutions (4K and above), refresh rates (240+ Hz), and complex lighting tech. To feed displays with gigapixels per second, architects introduced tensor accelerators, hardware RT blocks, and multi‑level caches, alongside upscaling technologies: DLSS for NVIDIA GPUs, FSR for AMD and other supported GPUs, and XeSS for Intel and other supported GPUs. DLSS did launch in 2018 (RTX 20-series), but the 2020s brought the major refinements (DLSS 3.0, 3.5, and 4.0; FSR 2.0 and above; XeSS).

In less than thirty years, the demand for acceleration has increased thousands of times—GPUs have had to boost parallelism and invent new ways to overcome limits on power and heat.

The Architecture of a Modern GPU

Streaming multiprocessors

Inside the die are dozens or hundreds of identical clusters (called Compute Units for AMD and Streaming Multiprocessors for NVIDIA). They are somewhat similar to the cores of a CPU. Each cluster contains:

- ALU cores for vector‑ and matrix‑math.

- Texturing units for sampling and filtering textures.

- FP or INT cores for floating‑point and integer operations (some cores are FP+INT fused, depending on the architecture).

- Tensor cores (also called matrix cores) for AI/ML calculations and RT cores for ray tracing.

- Local caches and register files.

The idea is simple: run one instruction simultaneously on thousands of pixels—Single Instruction, Multiple Threads (SIMT)—while another batch processes neighboring triangles.
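To make the lockstep idea concrete, here is a minimal Python sketch of SIMT execution. It is a toy model, not how any real driver or GPU is programmed: the group width of 32 lanes, the `run_warp` helper, and the `brighten` "instruction" are all assumptions made for illustration. It also shows what happens when only some lanes take a branch: the inactive lanes are masked off and simply wait.

```python
WARP_SIZE = 32  # assumed group width; real hardware typically uses 32 or 64 lanes


def run_warp(instruction, lane_inputs, active_mask=None):
    """Apply one instruction to every active lane of a warp in lockstep."""
    if len(lane_inputs) != WARP_SIZE:
        raise ValueError("a warp needs exactly WARP_SIZE inputs")
    if active_mask is None:
        active_mask = [True] * WARP_SIZE
    # Every lane sees the same instruction; masked-off lanes keep their old value.
    return [
        instruction(value) if active else value
        for value, active in zip(lane_inputs, active_mask)
    ]


def brighten(pixel):
    """Hypothetical shader instruction: add 10 to a brightness value, clamped to 255."""
    return min(pixel + 10, 255)


pixels = list(range(0, 256, 8))      # 32 brightness values: 0, 8, ..., 248
lit = run_warp(brighten, pixels)     # all 32 lanes execute the same instruction

# Divergence: only lanes holding dark pixels (< 128) take the branch.
mask = [p < 128 for p in pixels]
partly_lit = run_warp(brighten, pixels, mask)
```

In this model a divergent branch does not make the warp faster: the masked lanes still occupy their slots, which is one reason shader code tends to avoid heavy per-pixel branching.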

GPU memory and bus

Video random access memory (VRAM): the GPU’s own independent memory pool. GDDR6X or HBM3 delivers from hundreds of GB/s to several TB/s of bandwidth. HBM offers higher bandwidth but costs more and appears mainly in data-center accelerators, while GDDR6X is cheaper and more common in gaming cards.

L1/L2/L3 caches—reduce access latency to reusable graphics data (shadows, lightmaps, index buffers, etc.). Only some GPUs have L3, while others have bigger L2.

PCIe bus: the channel through which the CPU sends commands and data to the GPU and reads results back. Modern systems use Resizable BAR, which lets the CPU address the whole of VRAM directly instead of through a small fixed window (traditionally 256 MB). PCIe is the bottleneck here: VRAM is far faster than even a modern PCIe link, so minimizing CPU-GPU transfers is critical.
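A quick back-of-the-envelope calculation shows how large the gap between the PCIe link and VRAM can be. The two bandwidth figures below are rounded, illustrative assumptions (about 32 GB/s for a PCIe 4.0 x16 link in one direction and about 1 TB/s for a high-end VRAM subsystem), not measurements of any specific card.

```python
def transfer_time_ms(size_gb, bandwidth_gb_per_s):
    """Time in milliseconds to move size_gb gigabytes at the given bandwidth."""
    return size_gb / bandwidth_gb_per_s * 1000.0


PCIE4_X16_GB_S = 32.0   # assumed: rounded theoretical PCIe 4.0 x16 rate, one direction
VRAM_GB_S = 1000.0      # assumed: roughly 1 TB/s for a high-end card

over_pcie = transfer_time_ms(1.0, PCIE4_X16_GB_S)   # moving 1 GB across the bus
inside_vram = transfer_time_ms(1.0, VRAM_GB_S)      # reading 1 GB from VRAM

print(f"1 GB over PCIe: {over_pcie:.2f} ms")
print(f"1 GB from VRAM: {inside_vram:.2f} ms")
print(f"PCIe is about {over_pcie / inside_vram:.0f}x slower")
```

Under these assumptions, moving a gigabyte across the bus costs several whole frames at high refresh rates, which is why engines try to upload assets once and keep them resident in VRAM.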

Frontend and task scheduling

The command processor analyzes the order of API calls, forms bundles of draw calls, and schedules them onto clusters. While waiting for data, the GPU doesn’t sit idle: thanks to the hardware scheduler, overlapping work proceeds—ray tracing, texture decompression, and tensor upscaling running in parallel with pixel shaders.

The Frame Rendering Pipeline in Games

- CPU logic—gameplay, AI, physics; output: transformation matrices, light positions, render commands. Modern engines use multithreaded command recording.

- Vertex stage—the GPU converts vertices into screen space, applies animation, bone skinning, and morph targets. If tessellation is used, vertex shading may run twice. Modern pipelines (mesh shaders) can skip traditional vertex shading entirely.

- Tessellation/mesh shaders—dynamically subdivide large polygons, adding geometry where the player sees detail and saving it in the distance.

- Geometry culling and clipping: triangles hidden by walls or lying outside the camera’s field of view are discarded, which can save 50-80% of the work.

- Rasterization—turns triangles into fragments (potential pixels).

- Pixel shaders—for each fragment, compute color: sample textures and normals, then mix light, shadows, and reflections.

- Ray tracing (hybrid pass)—calculates specular reflections, global illumination, and soft shadows using BVH trees.

- Post‑effects: bloom, depth‑of‑field, motion blur, HDR tone mapping.

- Compositing and output—the final image goes to the frame buffer; at the same time, tensor cores can upscale it to 4K, saving render time.

The whole chain must finish in roughly 4-8 ms to hit 120+ FPS (120 FPS leaves about 8.3 ms per frame), so the GPU operates under brutal time constraints.
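Two of the stages listed above, culling and rasterization, can be sketched in a few lines of Python. This is a simplified software illustration, not how GPU hardware implements them: it assumes counter-clockwise winding marks a front-facing triangle, tests pixel centers with the classic edge-function approach, and scans the whole grid instead of a bounding box. The function names are hypothetical.

```python
def edge(a, b, p):
    """Signed area term: positive when p lies to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def is_front_facing(v0, v1, v2):
    """Back-face culling: keep triangles wound counter-clockwise (assumed convention)."""
    return edge(v0, v1, v2) > 0


def rasterize(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centers fall inside the triangle."""
    if not is_front_facing(v0, v1, v2):
        return []  # culled: no fragments are generated at all
    fragments = []
    for y in range(height):
        for x in range(width):
            center = (x + 0.5, y + 0.5)
            inside = (
                edge(v0, v1, center) >= 0
                and edge(v1, v2, center) >= 0
                and edge(v2, v0, center) >= 0
            )
            if inside:
                fragments.append((x, y))
    return fragments


front = rasterize((0, 0), (4, 0), (0, 4), 4, 4)   # counter-clockwise: drawn
back = rasterize((0, 0), (0, 4), (4, 0), 4, 4)    # clockwise: culled
print(len(front), "fragments for the front face,", len(back), "for the back face")
```

Each fragment returned here is what the pixel-shader stage would then color. The culled triangle costs almost nothing, which is the point of discarding geometry early.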

More about pixel shaders

Anti‑aliasing and ambient occlusion—before a frame goes any farther down the pipe, the GPU often runs two subtle but crucial image‑quality passes. Anti‑aliasing smooths jagged polygon edges. Ambient occlusion darkens crevices and contact areas where light is naturally blocked. Although both effects seem cosmetic, they greatly enhance perceived realism and demand additional bandwidth and ALU time, so GPUs execute them in parallel with other pixel operations whenever possible.
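As a small illustration of how anti-aliasing softens a jagged edge, the sketch below uses supersampling: render at twice the resolution, then average each 2x2 block into one pixel. Supersampling is only one of several anti-aliasing techniques and is used here because it is the easiest to show; the `downsample_2x` helper and the tiny test image are assumptions for the example.

```python
def downsample_2x(image):
    """Average each 2x2 block of a grayscale image (a list of rows) into one pixel."""
    height, width = len(image), len(image[0])
    if height % 2 or width % 2:
        raise ValueError("image dimensions must be even")
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


# A hard diagonal edge rendered at 4x4 (1 = covered by the polygon, 0 = empty).
high_res = [
    [1, 0, 0, 0],
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [1, 1, 1, 1],
]

smoothed = downsample_2x(high_res)
for row in smoothed:
    print(row)
```

Pixels that straddle the edge end up with intermediate values instead of a hard 0 or 1, which is what the eye reads as a smoother line. The cost is visible too: four samples were shaded for every output pixel.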

What Affects GPU Performance in Games

- ALU density—more cores mean more pixels and vertices processed in parallel.

- Core frequency—higher clocks improve responsiveness but demand robust cooling.

- VRAM capacity, bus width, and speed: 4K textures and occlusion maps weigh multiple gigabytes; if they don’t fit in VRAM, data spills over PCIe into much slower system RAM and FPS plummets. If memory bandwidth is too low, FPS drops as well.

- RT cores—hardware ray tracing scales almost linearly with their number. Hybrid rendering has diminishing returns.

- Tensor cores—power DLSS and similar tech, frame generation, and more.

- Engine optimization: Unreal, Unity, Frostbite, and other engines each divide work between graphics and compute pipelines differently; a well‑tuned profile can sometimes double performance on mid‑range hardware.
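The memory-related factors above can be put into numbers with two simple formulas. The sketch below assumes the usual peak-bandwidth relation (bus width in bytes times per-pin data rate) and an uncompressed 4-bytes-per-texel texture whose full mipmap chain adds about one third; the example bus widths and data rates are illustrative, and real games use compressed formats that are considerably smaller.

```python
def memory_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak bandwidth in GB/s: bytes per transfer across the bus x per-pin rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


def texture_size_mib(width, height, bytes_per_texel=4, with_mipmaps=True):
    """Approximate size of an uncompressed texture; a full mip chain adds about 1/3."""
    base = width * height * bytes_per_texel
    total = base * 4 / 3 if with_mipmaps else base
    return total / (1024 ** 2)


# Illustrative configurations (assumed values, not a spec sheet).
print(memory_bandwidth_gb_s(384, 21))   # wide bus, fast memory
print(memory_bandwidth_gb_s(256, 14))   # narrower bus, slower memory

# One uncompressed 4096 x 4096 RGBA texture, without and with mipmaps.
print(texture_size_mib(4096, 4096, with_mipmaps=False))
print(round(texture_size_mib(4096, 4096), 1))
```

A few hundred such textures already run into the gigabytes, which is why both capacity and bandwidth appear in the list.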

Refresh rate

Refresh rate matters, too. While resolution tells you how many pixels the GPU must render, refresh rate dictates how many times per second the monitor can display a new frame. Driving a 144 Hz or 240 Hz panel means the graphics card has just 6.9 ms or 4.2 ms, respectively, to finish every full pipeline pass. If the GPU misses that window, the display shows an older frame, causing stutter or tearing.
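The time budgets quoted above follow directly from dividing one second by the refresh rate. A minimal sketch (the helper names are made up for the example):

```python
def frame_budget_ms(refresh_hz):
    """Milliseconds available to render one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def meets_budget(render_time_ms, refresh_hz):
    """True if a frame rendered in render_time_ms arrives in time for the next refresh."""
    return render_time_ms <= frame_budget_ms(refresh_hz)


for hz in (60, 120, 144, 240):
    print(f"{hz} Hz -> {frame_budget_ms(hz):.1f} ms per frame")

# A frame that takes 7.5 ms is fine at 120 Hz but late at 144 Hz.
print(meets_budget(7.5, 120), meets_budget(7.5, 144))
```

The same 7.5 ms frame that comfortably fits a 120 Hz panel misses the window at 144 Hz, so a higher refresh rate tightens the requirement even when resolution stays the same.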

Modern GPU Features and Innovations

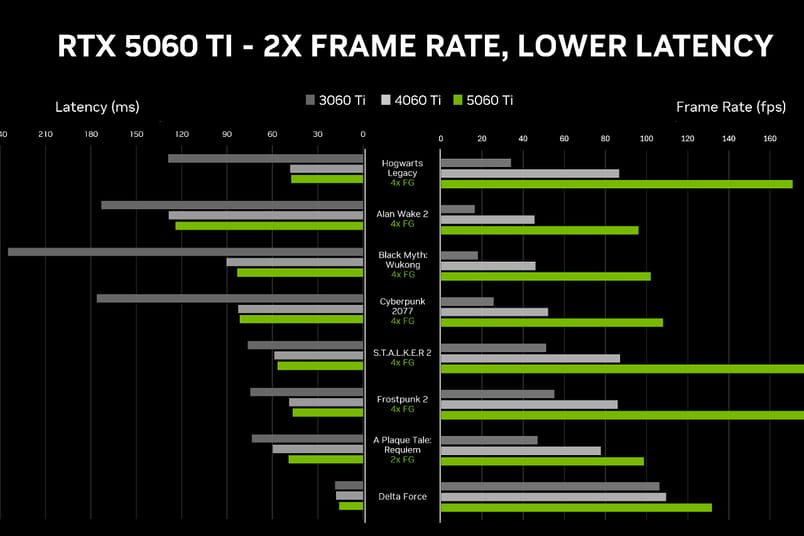

- Next‑gen upscaling: DLSS 4 and FSR 4 use motion vectors and neural networks to predict missing pixels (FSR 3 and earlier rely on hand‑tuned algorithms instead), greatly increasing performance with little visible loss of clarity.

- Frame generation: the GPU renders real frames and an AI model generates extra frames between them; displayed FPS can nearly double, though the added latency depends on the game and settings. Multi-frame generation is available through DLSS 4, but few games support it so far, and performance gains vary.

- Intelligent denoising—tensor blocks scrub grain from ray‑traced passes in half a frame.

- AV1 hardware codec—streaming via OBS or NVIDIA Share/ShadowPlay no longer taxes the CPU, and bitrate drops about 30% with no quality loss.

- Asynchronous computing—tech that lets lightweight computations run alongside the pixel rendering pipeline.

- On‑the‑fly texture compression: modern formats store tens of gigabytes of assets without overflowing VRAM.
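To see why upscaling saves so much render time, it helps to count pixels. The sketch below compares how many pixels are actually shaded when a game renders internally at a lower resolution and upscales to 4K. The resolutions are the common 16:9 conventions, and the calculation deliberately ignores the cost of the upscaling pass itself.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def shaded_fraction(render_res, output_res):
    """Share of output pixels that are natively rendered before upscaling."""
    return pixel_count(render_res) / pixel_count(output_res)


for internal in ("1440p", "1080p"):
    share = shaded_fraction(internal, "4K")
    print(f"{internal} -> 4K: {share:.0%} of the pixels are rendered natively")
```

Rendering at 1080p and upscaling to 4K shades only a quarter of the output pixels. The rest are reconstructed, which is where the performance gain comes from.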

How to Choose a GPU for Yourself

Define your goal

- Competitive games at 1080p/144+ Hz: look for a mid‑range GPU (NVIDIA RTX 3060 Ti, for example) with low latency, high core clock speeds, and strong 1% low FPS. For you, consistency will matter more than raw power.

- QHD AAA-gaming at 60+ FPS—prioritize RT cores and at least 16 GB of VRAM to handle ray tracing, high-resolution textures, and quality effects (AMD Radeon RX 7900 GRE is a good option). Frame generation (DLSS 4 or FSR 3+) support is a big advantage.

- VR headset or 4K: you’ll want 20+ GB of VRAM, excellent cooling, and AV1 hardware encoding/decoding support for streaming or VR (an NVIDIA RTX 4090 should be sufficient). High memory bandwidth (GDDR6X or faster) is essential for large-scale assets.

Check system components

- PSU. Modern flagship GPUs can draw 450-600 W under peak load, and their makers typically recommend an 850-1000 W power supply. They also need a 12V-2×6 (16-pin) power connector.

- Case and cooling. Triple-fan, 3.5-slot GPUs can exceed 320 mm in length and 150 mm in height, and are around 70 mm thick. Double-check your case clearance and airflow.

- CPU. It’s not GPU vs CPU; balance matters. Be sure that your processor isn’t the bottleneck; check different GPU benchmarks.

Look at real‑world tests. Focus on:

- 1% low FPS for smoothness.

- Power draw and thermals under load.

- Noise levels.

- How well the GPU handles actual games you plan to play.
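Reviewers do not all compute "1% low FPS" the same way. The sketch below uses one common definition, assumed here: the average frame rate over the slowest 1% of frames in a capture. The frame-time data is synthetic and only meant to show why this metric exposes stutter that the average hides.

```python
def average_fps(frame_times_ms):
    """Average FPS over a capture: frames divided by total seconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Average FPS over the slowest 1% of frames (one common definition, assumed here)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


# Synthetic capture: 990 smooth frames at 8 ms plus 10 stutters at 40 ms.
capture = [8.0] * 990 + [40.0] * 10

print(f"Average: {average_fps(capture):.1f} FPS")
print(f"1% low:  {one_percent_low_fps(capture):.1f} FPS")
```

The average still looks excellent, while the 1% low reveals the stutters. Two cards with the same average can feel very different in play for exactly this reason.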

If you’re not upgrading often, investing in a top-tier card now may give you better value in the long run. There’s no single best GPU for gaming; it depends on your needs.

FAQ

What is a GPU, and what does GPU stand for?

GPU stands for Graphics Processing Unit—the specialized hardware designed to handle graphics rendering, visual effects, and parallel computations.

What is a normal GPU temp?

Normal idle temperatures range from 30-50°C, while under gaming or load, 65-85°C is typical. Some GPUs can safely reach up to 90°C, but cooler is generally better.

How to check GPU temp?

You can monitor GPU temp via the Performance tab in Task Manager in Windows (for basic info) or using various tools (MSI Afterburner, AIDA64, HWMonitor, GPU-Z, and more).
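If you prefer a script, NVIDIA’s `nvidia-smi` command-line tool (installed with the NVIDIA driver) can report the temperature as plain text. The Python sketch below wraps it. It covers NVIDIA cards only, the helper names are made up for the example, and it returns `None` when `nvidia-smi` is not found on the system.

```python
import shutil
import subprocess


def parse_gpu_line(line):
    """Split one 'name, temperature' CSV line into (name, degrees Celsius)."""
    name, temp = line.rsplit(",", 1)
    return name.strip(), int(temp.strip())


def read_nvidia_gpus():
    """Return [(name, temp_c), ...], or None when nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=name,temperature.gpu",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,  # raise if the tool itself reports an error
    )
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]
```

Calling `read_nvidia_gpus()` on a machine with an NVIDIA card returns one `(name, temperature)` pair per GPU, which also answers the "what GPU do I have" question for those cards.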

What is an integrated GPU vs a dedicated GPU?

- Integrated GPU. Built into the CPU, uses system RAM, lower performance.

- Dedicated GPU. A separate graphics card with its own VRAM, much better for gaming and 3D workloads.

What GPU do I have/what is my GPU?

On Windows, go to Device Manager > Display Adapters. Third-party apps like GPU-Z also work.

How to update GPU drivers?

- NVIDIA. Use the NVIDIA App or download and install drivers from nvidia.com.

- AMD. Use AMD Software: Adrenalin Edition or visit amd.com.

Summary

A modern GPU is far more than a luxury add‑on for pretty graphics. It is the parallel workhorse that turns mathematical scene data into the 120+ FPS we now expect. From its origins in the 1990s—fixed‑function cards that could merely texture a triangle—graphics hardware has evolved through programmable shaders, unified compute, and today’s ray‑tracing and AI accelerators. Over three decades, the demand for computer graphics has risen by orders of magnitude, and every architectural advance (larger ALU arrays, high‑bandwidth GDDR6X or HBM3, multi‑level caches) exists to keep the frame‑rendering pipeline within the shortest time possible.

Inside each GPU sit dozens of units that blend vector ALUs, texture units, RT cores, and tensor cores. Geometry is culled, rasterized, shaded, denoised, upscaled, and finally composited (often in parallel) before a frame is scanned to the display. Performance depends on more than raw teraflops: sufficient VRAM capacity and speed prevent spills into slower system memory, RT cores dictate ray‑tracing throughput, and refresh‑rate targets shrink the time budget per frame. Balanced power delivery, adequate case clearance, and a CPU that can feed the card are equally critical, and real‑world benchmarks help you choose the right GPU.

Looking ahead, GPU development is expected to continue along familiar paths: improved efficiency, smarter use of memory, and gradual architectural refinement. Technologies like chiplet designs and stacked memory are becoming more common, aiming to balance performance with power consumption. AI features, upscaling, and frame generation are likely to become more widespread and better integrated into both hardware and game engines. Meanwhile, interest in external and hybrid solutions—especially for mobile and compact setups—is also growing.

While it’s impossible to predict every shift in GPU design, one thing is certain: as games evolve, so too will the demands placed on graphics hardware. Choosing a GPU today is not just about benchmarks, but about ensuring the feature set aligns with your use case—and leaves some room for the next generation of experiences.